Cloudera Community > Support > Support Questions


Wrong runlevels of CDH packages for use with Cloudera Manager (according to the Host Inspector)

Created on 12-15-2015 01:01 AM - edited 09-16-2022 02:53 AM


Hello community,

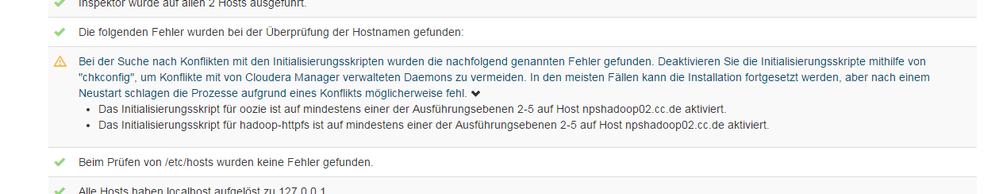

I have a question about installing Cloudera with Cloudera Manager and CDH packages (installation Path B) on Red Hat 6.5:

After installing all the required packages with the command

```bash
sudo yum install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-hdfs-nfs3 hadoop-httpfs hadoop-kms hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie pig pig-udf-datafu search sentry solr-mapreduce spark-core spark-master spark-worker spark-history-server spark-python sqoop sqoop2 whirr
```

the Host Inspector reports problems on those hosts concerning the runlevels of the services. In essence, oozie and hadoop-httpfs have their init scripts activated on runlevels 2-5.
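The Host Inspector complaint boils down to which runlevels each init script is enabled on. A minimal sketch for checking this from the shell, assuming the RHEL/CentOS 6 `chkconfig --list` output format (one line per service, `N:on`/`N:off` per runlevel); the function name `enabled_runlevels` is hypothetical.

```bash
#!/bin/bash
# Print the runlevels on which a SysV service is enabled, reading
# `chkconfig --list` output (RHEL/CentOS 6 format) from stdin.
# Example input line:
#   oozie   0:off 1:off 2:on 3:on 4:on 5:on 6:off
enabled_runlevels() {
    local svc="$1"
    awk -v svc="$svc" '
        $1 == svc {
            out = ""
            for (i = 2; i <= NF; i++) {
                split($i, kv, ":")              # kv[1] = runlevel, kv[2] = on/off
                if (kv[2] == "on") {
                    sep = (out == "") ? "" : " "
                    out = out sep kv[1]
                }
            }
            print out
        }'
}
```

For example, `chkconfig --list | enabled_runlevels oozie` should print `2 3 4 5` for the state the Host Inspector reports here, and nothing at all if the service has no init script registered.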

Is this an actual problem for my cluster?

And why do the Cloudera packages, which are only used in this context, have an incorrect runlevel configuration?

Could other services have incorrect runlevels as well?

Thanks for your support.

Best regards,

Benjamin


Created 12-15-2015 02:43 AM


One addition: I searched for other CDH services that have init scripts enabled on certain runlevels and found:

- hadoop-hdfs-nfs3

- spark-history-server

- spark-master

- spark-worker

For all of these, the init scripts are active on runlevels 3-5.

After a reboot, some of them actually started up, although nothing had been started on the node from CM yet:

```
Checking runlevels and status for service: hadoop-hdfs-nfs3
Hadoop HDFS NFS v3 service is running                      [  OK  ]
hadoop-hdfs-nfs3        0:off 1:off 2:off 3:on 4:on 5:on 6:off

Checking runlevels and status for service: spark-history-server
Spark history-server is dead and pid file exists           [FAILED]
spark-history-server    0:off 1:off 2:off 3:on 4:on 5:on 6:off

Checking runlevels and status for service: spark-master
Spark master is running                                    [  OK  ]
spark-master            0:off 1:off 2:off 3:on 4:on 5:on 6:off

Checking runlevels and status for service: spark-worker
Spark worker is dead and pid file exists                   [FAILED]
spark-worker            0:off 1:off 2:off 3:on 4:on 5:on 6:off
```

Are you aware of these issues with the packages?

BTW, the output was produced by this script:

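```bash
#!/bin/bash
# For each service: show its init-script status and the runlevels it is enabled on.
for i in hadoop-hdfs-nfs3 spark-history-server spark-master spark-worker; do
    echo "Checking runlevels and status for service: $i"
    service "$i" status
    chkconfig --list "$i"     # list only this service instead of grepping all of them
    echo ""
done
```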

Created 12-15-2015 08:37 PM


Hey Ben

What I did (on Ubuntu) was to look for the Hadoop services in /etc/init.d and then turn them off using update-rc.d. Can't you pipe the output of your script through grep and then run `chkconfig --level 2345 <service> off` on each match?
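This suggestion can be sketched for RHEL/CentOS as a small pipeline: filter `chkconfig --list` for CDH services that are enabled on any runlevel, then switch them off on runlevels 2-5. It is a sketch, not a tested procedure: the name pattern `CDH_PATTERN`, both function names and the `DRY_RUN` switch are hypothetical, and by default the script only prints the `chkconfig` commands it would run.

```bash
#!/bin/bash
# Hypothetical name pattern; adjust it to the CDH packages installed on your hosts.
CDH_PATTERN='^(hadoop|hbase|hive|hue|impala|oozie|solr|spark|sqoop|zookeeper|flume|llama|sentry)'

# Read `chkconfig --list` output on stdin and print the names of matching
# services that are "on" in at least one runlevel.
enabled_cdh_services() {
    awk -v pat="$CDH_PATTERN" '$1 ~ pat && /:on/ { print $1 }'
}

# Disable every service named on stdin for runlevels 2-5.
# Dry run by default (commands are only printed); set DRY_RUN=0 to execute.
disable_services() {
    local svc
    while read -r svc; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "chkconfig --level 2345 $svc off"
        else
            chkconfig --level 2345 "$svc" off
        fi
    done
}
```

Run as root, `chkconfig --list | enabled_cdh_services | DRY_RUN=0 disable_services` would apply the changes; on Ubuntu, `update-rc.d <service> disable` plays the role of `chkconfig ... off`. Printing first keeps a mistyped pattern from disabling unrelated services.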

Created 12-16-2015 12:06 AM


Hey vkurien,

Thanks for your reply. I did as you suggested and turned them off with:

```bash
for service in avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-hdfs-nfs3 hadoop-httpfs hadoop-kms hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie pig pig-udf-datafu search sentry solr-mapreduce spark-core spark-master spark-worker spark-history-server spark-python sqoop sqoop2 whirr; do sudo chkconfig "$service" off; done
```
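Many names in this loop are client or library packages without an init script (avro-tools, pig or kite, for example), so `chkconfig` can only act on the real services among them and presumably just reports an error for the rest. A hedged sketch that keeps only names with an executable script in `/etc/init.d`; the function `services_with_init_scripts` and the `INIT_DIR` override are hypothetical.

```bash
#!/bin/bash
# Print only those names (given as arguments) that have an executable SysV
# init script. INIT_DIR defaults to /etc/init.d and can be overridden for testing.
services_with_init_scripts() {
    local dir="${INIT_DIR:-/etc/init.d}" name
    for name in "$@"; do
        [ -x "$dir/$name" ] && echo "$name"
    done
    return 0    # a missing script for the last name is not an error
}
```

Used as `for service in $(services_with_init_scripts avro-tools oozie hadoop-httpfs); do sudo chkconfig "$service" off; done`, the loop only touches packages that actually install a service. Without `--level`, `chkconfig ... off` affects runlevels 2-5, the same levels the Host Inspector complains about.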

I'm just surprised, because I followed installation Path B exactly and it did not work out as expected.

Can somebody please confirm whether this is intended behaviour or a bug?

Created 12-16-2015 09:01 AM


BTW, if you are installing from a base VM image, even more has to be done, such as removing UUIDs.

Created 12-16-2015 02:49 PM


Hi vkurien, could you please elaborate on that? By UUIDs, do you mean the Cloudera Manager Agent UUID, which has to be unique per node?

Created 12-16-2015 02:52 PM


Yes. So if you start from a "golden" VM image, make sure that the UUID is deleted from the image. I'm more paranoid, so my scripts turn things off, make sure that the UUID is gone, then start the agents and finally the server.
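The order described here for hosts cloned from a golden image can be written down as a plan. A minimal sketch, assuming SSH access to each host and the `cloudera-scm-agent` / `cloudera-scm-server` init scripts of Cloudera Manager 5; the UUID path is the one given in this thread, while the host names, variables and function name are placeholders. "Turning things off" is interpreted here as stopping the agent, and the commands are only printed, not executed.

```bash
#!/bin/bash
AGENT_HOSTS="node1 node2 node3"               # hypothetical agent hosts
CM_HOST="cm-server"                           # hypothetical Cloudera Manager host
UUID_FILE=/var/lib/cloudera-scm-agent/uuid    # agent UUID location

# Print the commands in order: stop each agent and delete its UUID,
# then start all agents, and only then start the Cloudera Manager server.
plan_golden_image_start() {
    local h
    for h in $AGENT_HOSTS; do
        echo "ssh $h 'service cloudera-scm-agent stop'"
        echo "ssh $h 'rm -f $UUID_FILE'"
    done
    for h in $AGENT_HOSTS; do
        echo "ssh $h 'service cloudera-scm-agent start'"
    done
    echo "ssh $CM_HOST 'service cloudera-scm-server start'"
}
```

Deleting the file before the first start presumably lets each agent generate its own UUID, so no two cloned hosts register with the same identity. Reviewing the printed plan (or piping it to `sh` once it looks right) keeps the ordering explicit.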

Created on 12-16-2015 03:00 PM - edited 12-16-2015 03:00 PM


Thanks for those insights. I recently ran into trouble because all nodes had the same UUID, so I learned that the hard way 😉

Just for completeness: the CM Agent UUID is stored in /var/lib/cloudera-scm-agent/uuid.
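To catch the shared-UUID problem before it bites, the UUID files of all agents can be collected and compared. A sketch, assuming input lines of the form `<host> <uuid>`; the function name and the collection loop in the comment are hypothetical.

```bash
#!/bin/bash
# Report agent UUIDs that occur on more than one host.
# Input on stdin: one "<host> <uuid>" pair per line, e.g. collected with
#   for h in node1 node2; do echo "$h $(ssh "$h" cat /var/lib/cloudera-scm-agent/uuid)"; done
# Output: "<uuid>: <host> <host> ..." for every duplicated UUID.
duplicate_agent_uuids() {
    awk '{ hosts[$2] = hosts[$2] " " $1; count[$2]++ }
         END { for (u in count) if (count[u] > 1) print u ":" hosts[u] }'
}
```

Empty output means every agent has its own UUID; any line printed names the hosts that still share one and need their UUID file removed before the agent is restarted.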

Do you have any other hints like these for automating cluster deployment with CM and custom scripts?

Created 12-16-2015 03:11 PM


I'm making a foray into the API soon, so I'm sure I'll know more then.

- Ensure /tmp is not mounted with noexec. This is an obvious security issue, but sometimes one just wants a working cluster.

- Trying to install older versions of CDH on Ubuntu is mostly a disaster, since the repo lists are wrong and the installation instructions (Path B) happily download the wrong repo list onto the agent machines and use it. This results in mysterious failures later.

- Does anyone actually test the instructions? Likely not!
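The first two points of this list can be checked on each agent host before installing. A minimal sketch with hypothetical function names: `tmp_is_noexec` reads the mount table from `/proc/mounts` (overridable via a hypothetical `MOUNTS` variable), and `repo_suites` prints the suite field of each `deb` line in an apt `.list` file, which is where a pinned CDH version would show up. A suite such as `trusty-cdh5.4.8` is an assumed example, not taken from this thread.

```bash
#!/bin/bash
# Succeed (exit status 0) if /tmp is mounted with the noexec option.
# MOUNTS defaults to /proc/mounts and can point at a copy for testing.
tmp_is_noexec() {
    awk '$2 == "/tmp" {
             n = split($4, opts, ",")
             for (i = 1; i <= n; i++) if (opts[i] == "noexec") found = 1
         }
         END { exit (found ? 0 : 1) }' "${MOUNTS:-/proc/mounts}"
}

# Print the suite (distribution) of every "deb" line in an apt .list file,
# skipping an optional "[arch=...]" options block before the URL.
repo_suites() {
    awk '$1 == "deb" {
             i = 2
             if (substr($i, 1, 1) == "[") {
                 while (i <= NF && substr($i, length($i), 1) != "]") i++
                 i++
             }
             print $(i + 1)
         }' "$1"
}
```

For example, `tmp_is_noexec && sudo mount -o remount,exec /tmp` relaxes the mount until the next reboot, and `repo_suites /etc/apt/sources.list.d/cloudera.list` (the file name is an assumption) shows which CDH suite an agent host would actually install from, so a mismatch surfaces before the installation rather than as a mysterious failure later.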