Support Questions

hdfs groups not returning mapped groups using Kerberos/LDAP

Created on 12-30-2019 05:21 AM, last edited on 12-30-2019 07:02 AM by VidyaSargur

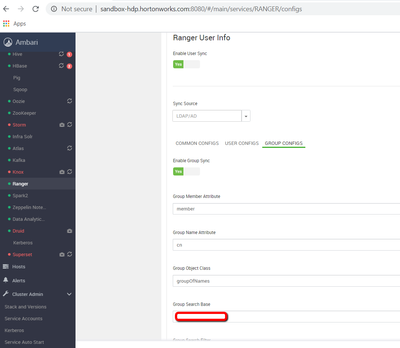

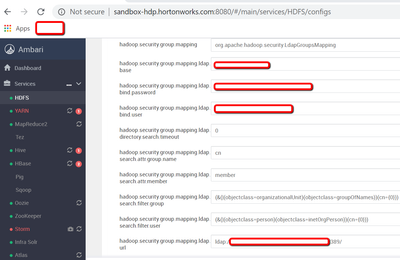

I have installed the HDP sandbox HDP-3.0.1.0 (3.0.1.0-187) using Docker and Kerberized the cluster.

I also spun up an LDAP server and set up user and group sync with Ranger.

Finally, I also set the HDFS advanced core-site configuration.
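For context, Hadoop resolves groups through LDAP when `hadoop.security.group.mapping` is set to `org.apache.hadoop.security.LdapGroupsMapping` and the `hadoop.security.group.mapping.ldap.*` properties describe the directory. The Python sketch below renders such a set of properties as core-site `<property>` entries. The server URL, bind DN and base DN are hypothetical placeholders, and the user and group filters assume an OpenLDAP-style directory with `inetOrgPerson` users and `groupOfNames` groups rather than the Active Directory-oriented defaults. The bind password (`hadoop.security.group.mapping.ldap.bind.password` or `hadoop.security.group.mapping.ldap.bind.password.file`) is deliberately left out.

```python
import xml.etree.ElementTree as ET

# Hypothetical values for an OpenLDAP-style directory; adjust to your environment.
LDAP_GROUP_MAPPING = {
    "hadoop.security.group.mapping": "org.apache.hadoop.security.LdapGroupsMapping",
    "hadoop.security.group.mapping.ldap.url": "ldap://ldap.example.com:389",
    "hadoop.security.group.mapping.ldap.bind.user": "cn=admin,dc=example,dc=com",
    "hadoop.security.group.mapping.ldap.base": "dc=example,dc=com",
    # {0} is replaced with the short user name at lookup time.
    "hadoop.security.group.mapping.ldap.search.filter.user": "(&(objectClass=inetOrgPerson)(uid={0}))",
    "hadoop.security.group.mapping.ldap.search.filter.group": "(objectClass=groupOfNames)",
    "hadoop.security.group.mapping.ldap.search.attr.member": "member",
    "hadoop.security.group.mapping.ldap.search.attr.group.name": "cn",
}


def render_core_site(props):
    """Return a core-site.xml style <configuration> document for the given properties."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value  # '&' is escaped to '&amp;' automatically
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(render_core_site(LDAP_GROUP_MAPPING))
```

In Ambari these would go under HDFS > Configs > Advanced > Custom core-site. Since `hdfs groups` asks the NameNode for the mapping, the NameNode probably has to pick up the change (a restart, or `hdfs dfsadmin -refreshUserToGroupsMappings`) before new groups show up.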

I can run an `ldapsearch` successfully.

However, `hdfs groups` doesn't seem to work.

I really have no idea where to look from here. Any help would be greatly appreciated.
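Since `ldapsearch` works, one way to narrow this down is to replay the two searches that `LdapGroupsMapping` roughly performs, using exactly the filters from core-site: first find the user's DN with the user filter (`{0}` replaced by the short user name), then find groups whose member attribute holds that DN. The sketch below only prints the equivalent `ldapsearch` command lines. It is a simplified model, not Hadoop's code, and the `PROPS` values are hypothetical placeholders for whatever is configured in Ambari.

```python
import shlex

# Hypothetical core-site values; replace with the ones configured in Ambari.
PROPS = {
    "hadoop.security.group.mapping.ldap.url": "ldap://ldap.example.com:389",
    "hadoop.security.group.mapping.ldap.bind.user": "cn=admin,dc=example,dc=com",
    "hadoop.security.group.mapping.ldap.base": "dc=example,dc=com",
    "hadoop.security.group.mapping.ldap.search.filter.user": "(&(objectClass=inetOrgPerson)(uid={0}))",
    "hadoop.security.group.mapping.ldap.search.filter.group": "(objectClass=groupOfNames)",
    "hadoop.security.group.mapping.ldap.search.attr.member": "member",
    "hadoop.security.group.mapping.ldap.search.attr.group.name": "cn",
}


def escape_filter_value(value):
    """Escape special characters for use inside an LDAP filter value (RFC 4515)."""
    return "".join("\\%02x" % ord(ch) if ch in "\\*()\x00" else ch for ch in value)


def ldapsearch_commands(props, user, user_dn):
    """Return (user_search, group_search) as shell-quoted ldapsearch command lines."""
    common = [
        "ldapsearch", "-x",  # simple bind
        "-H", props["hadoop.security.group.mapping.ldap.url"],
        "-D", props["hadoop.security.group.mapping.ldap.bind.user"],
        "-W",  # prompt for the bind password
        "-b", props["hadoop.security.group.mapping.ldap.base"],
    ]
    # Step 1: the user filter with {0} replaced by the escaped short user name.
    user_filter = props["hadoop.security.group.mapping.ldap.search.filter.user"].replace(
        "{0}", escape_filter_value(user))
    # Step 2: entries matching the group filter whose member attribute holds the user's DN.
    group_filter = "(&{}({}={}))".format(
        props["hadoop.security.group.mapping.ldap.search.filter.group"],
        props["hadoop.security.group.mapping.ldap.search.attr.member"],
        escape_filter_value(user_dn))
    group_name_attr = props["hadoop.security.group.mapping.ldap.search.attr.group.name"]
    # "1.1" requests no attributes, so only the DN is printed.
    return (shlex.join(common + [user_filter, "1.1"]),
            shlex.join(common + [group_filter, group_name_attr]))


if __name__ == "__main__":
    user_cmd, group_cmd = ldapsearch_commands(
        PROPS, "alice", "uid=alice,ou=people,dc=example,dc=com")
    print(user_cmd)
    print(group_cmd)
```

Run the first command, take the `dn:` it returns and pass it as `user_dn` for the second. If either search comes back empty, the core-site filters are worth revisiting before the LDAP server itself.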

Created 01-06-2020 04:40 AM

Just had a hunch and restarted ambari-server using

ambari-server restart

This step is not mentioned in the documentation! https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/ambari-authentication-ldap-ad/content/amb_ldap...

This makes the LDAP login work successfully!

Created 12-30-2019 10:12 AM

Check the values under Ambari > Ranger > Configs > Advanced > Advanced *ranger-ugsync-site* and make sure they are appropriate for your HDP version; adjust them if not.

`ranger.usersync.policymanager.mockrun=true` means usersync is disabled, because it is only a mock run. Set it to `false`; this will trigger the usersync.

If that doesn't help, can you share the ambari-server log?

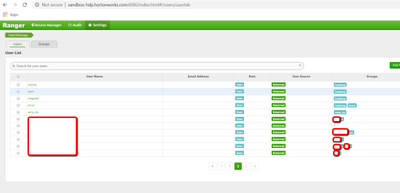

Ranger could also be looking for the `uid` attribute. If your users have `cn` rather than `uid`, it may retrieve the users and groups from LDAP but not insert them into the database.
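To illustrate the `uid` versus `cn` point: if usersync takes the user name from an attribute the entries do not carry, those entries are still returned by the LDAP search but yield no name to store. The following is a simplified Python model of that situation with hypothetical entries, not Ranger's actual code; in ranger-ugsync-site the attribute is presumably the one set by `ranger.usersync.ldap.user.nameattribute`.

```python
# Hypothetical directory entries as an LDAP search might return them:
# attribute name -> list of values.
ENTRIES = [
    {"dn": ["cn=alice,ou=people,dc=example,dc=com"], "cn": ["alice"], "uid": ["alice"]},
    {"dn": ["cn=bob,ou=people,dc=example,dc=com"], "cn": ["bob"]},  # no uid attribute
]


def extract_user_names(entries, name_attribute):
    """Return (names, skipped_dns) using name_attribute as the user name source.

    Simplified model: an entry without the configured attribute produces no
    user name, so it was fetched from LDAP but cannot be stored as a user.
    """
    names, skipped = [], []
    for entry in entries:
        values = entry.get(name_attribute)
        if values:
            names.append(values[0])
        else:
            skipped.append(entry["dn"][0])
    return names, skipped


if __name__ == "__main__":
    print(extract_user_names(ENTRIES, "uid"))  # bob is skipped
    print(extract_user_names(ENTRIES, "cn"))   # both users found
```

In this model the fix is to point the name attribute at whatever attribute the directory entries actually carry.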

Hope that helps

Created 12-31-2019 03:11 AM

@Shelton, thank you for your reply.

I'm not sure whether you want me to set the mockrun variable to true or false. It was set to false; I tried setting it to true (the log after this change is included below).

Just to make sure: the users do sync to Ranger, and the groups are synced correctly as well. I just don't see these groups when I run `hdfs groups`. I expect to see the groups of the currently authenticated Kerberos principal.
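Without an argument, `hdfs groups` reports the groups of the current user, and on a Kerberized cluster that user is the short name Hadoop derives from the principal through the `hadoop.security.auth_to_local` rules. The LDAP lookup then runs for that short name, so it has to match the name stored in the directory (for example the `uid`). The Python sketch below approximates only the built-in `DEFAULT` rule for one- and two-component principals; the realm `EXAMPLE.COM` is a placeholder, and custom `RULE:` entries are not modeled.

```python
import re


def default_short_name(principal, default_realm):
    """Approximate Hadoop's DEFAULT auth_to_local rule.

    'alice@EXAMPLE.COM' -> 'alice'; 'nn/host.example.com@EXAMPLE.COM' -> 'nn'.
    Principals from another realm are not mapped by DEFAULT, so raise ValueError.
    """
    match = re.fullmatch(r"([^/@]+)(?:/([^/@]+))?@([^/@]+)", principal)
    if not match:
        raise ValueError("not a principal of the form name[/host]@REALM: %r" % principal)
    name, _host, realm = match.groups()
    if realm != default_realm:
        raise ValueError("realm %s is not the default realm; DEFAULT does not map it" % realm)
    return name


if __name__ == "__main__":
    print(default_short_name("alice@EXAMPLE.COM", "EXAMPLE.COM"))
```

Running `hdfs groups <username>` with an explicit short name can help separate a principal-mapping problem from an LDAP lookup problem.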

Here is a link to the full ambari-server log (1.7 MB zipped; the full file is 17 MB): https://gofile.io/?c=W9aQaf

2019-12-31 10:47:46,737 INFO [ambari-client-thread-3218] AgentHostDataHolder:108 - Configs update with hash 51484f76230ae56f22862429a157308022346391646887cd119ee001122c7daabbef9fe6d649e69107764db55cf4d3a5ccb93aed3f7ff6ebf1081d4ed430e320 will be sent to host 1

2019-12-31 10:48:01,074 INFO [ambari-client-thread-37] StackAdvisorRunner:55 - StackAdvisorRunner. serviceAdvisorType=PYTHON, actionDirectory=/var/run/ambari-server/stack-recommendations/3, command=recommend-configurations

2019-12-31 10:48:01,110 INFO [ambari-client-thread-37] StackAdvisorRunner:64 - StackAdvisorRunner. Expected files: hosts.json=/var/run/ambari-server/stack-recommendations/3/hosts.json, services.json=/var/run/ambari-server/stack-recommendations/3/services.json, output=/var/run/ambari-server/stack-recommendations/3/stackadvisor.out, error=/var/run/ambari-server/stack-recommendations/3/stackadvisor.err

2019-12-31 10:48:01,110 INFO [ambari-client-thread-37] StackAdvisorRunner:77 - StackAdvisorRunner.runScript(): Calling Python Stack Advisor.

2019-12-31 10:48:11,764 INFO [ambari-client-thread-37] StackAdvisorRunner:167 - Advisor script stdout: Returning DefaultStackAdvisor implementation

2019-12-31 10:48:07,557 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Memory for YARN apps - cluster[totalAvailableRam]: 17408

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - user operation context : RecommendAttribute

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Full context: callContext = recommendConfigurations and operation = RecommendAttribute and adding YARN = False and old value exists = None

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Using user provided yarn.scheduler.minimum-allocation-mb = 256

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Minimum ram per container due to user input - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Containers per node - cluster[containers]: 3

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Ram per containers before normalization - cluster[ramPerContainer]: 5802

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Min container size - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for map - cluster[mapMemory]: 5632

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for reduce - cluster[reduceMemory]: 5632

2019-12-31 10:48:07,558 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for am - cluster[amMemory]: 5632

2019-12-31 10:48:07,576 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service AMBARI_INFRA_SOLR was loaded

2019-12-31 10:48:07,586 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service ATLAS was loaded

2019-12-31 10:48:07,595 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service DATA_ANALYTICS_STUDIO was loaded

2019-12-31 10:48:07,612 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service DRUID was loaded

2019-12-31 10:48:07,630 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service HBASE was loaded

2019-12-31 10:48:07,659 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service HDFS was loaded

2019-12-31 10:48:07,695 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service HIVE was loaded

2019-12-31 10:48:07,731 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service KAFKA was loaded

2019-12-31 10:48:07,812 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service KNOX was loaded

2019-12-31 10:48:07,822 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service MAPREDUCE2 was loaded

2019-12-31 10:48:07,840 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service OOZIE was loaded

2019-12-31 10:48:07,848 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service PIG was loaded

2019-12-31 10:48:07,884 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service RANGER was loaded

2019-12-31 10:48:07,885 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service SPARK2 was loaded

2019-12-31 10:48:07,903 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service SQOOP was loaded

2019-12-31 10:48:07,921 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service STORM was loaded

2019-12-31 10:48:07,938 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service SUPERSET was loaded

2019-12-31 10:48:07,957 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service TEZ was loaded

2019-12-31 10:48:08,011 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service YARN was loaded

2019-12-31 10:48:08,012 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service ZEPPELIN was loaded

2019-12-31 10:48:08,039 INFO DefaultStackAdvisor instantiateServiceAdvisor: - ServiceAdvisor implementation for service ZOOKEEPER was loaded

2019-12-31 10:48:08,066 INFO DefaultStackAdvisor constructAtlasRestAddress: - Constructing atlas.rest.address=http://sandbox-hdp.hortonworks.com:21000

2019-12-31 10:48:08,066 INFO HDP30DATAANALYTICSSTUDIOServiceAdvisor getServiceConfigurationRecommendations: - Conf recommendations for DAS

2019-12-31 10:48:08,066 INFO HDP30DATAANALYTICSSTUDIOServiceAdvisor getServiceConfigurationRecommendations: - configuring knox ...

2019-12-31 10:48:08,067 INFO HDP30DATAANALYTICSSTUDIOServiceAdvisor getServiceConfigurationRecommendations: - knox host: sandbox-hdp.hortonworks.com, knox port: 8443

2019-12-31 10:48:08,067 INFO HDP30DATAANALYTICSSTUDIOServiceAdvisor getServiceConfigurationRecommendations: - setting up proxy hosts

2019-12-31 10:48:08,288 INFO DruidServiceAdvisor getServiceConfigurationRecommendations: - Class: DruidServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,323 INFO DefaultStackAdvisor recommendHBASEConfigurationsFromHDP26: - Not setting Hbase Repo user for Ranger.

2019-12-31 10:48:08,324 INFO DefaultStackAdvisor setHandlerCounts: - hbaseRam=8192, cores=8

2019-12-31 10:48:08,324 INFO DefaultStackAdvisor setHandlerCounts: - phoenix_enabled=True

2019-12-31 10:48:08,324 INFO DefaultStackAdvisor setHandlerCounts: - Setting HBase handlers to 70

2019-12-31 10:48:08,324 INFO DefaultStackAdvisor setHandlerCounts: - Setting Phoenix index handlers to 20

2019-12-31 10:48:08,350 INFO HDFSServiceAdvisor getServiceConfigurationRecommendations: - Class: HDFSServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,351 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. Recommending Service Configurations.

2019-12-31 10:48:08,379 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. Total Available Ram: 17408

2019-12-31 10:48:08,387 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. HDFS nameservices: None

2019-12-31 10:48:08,413 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. Updating HDFS mount properties.

2019-12-31 10:48:08,422 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. HDFS Data Dirs: ['/hadoop/hdfs/data']

2019-12-31 10:48:08,439 INFO DefaultStackAdvisor recommendConfigurationsFromHDP206: - Class: HDFSRecommender, Method: recommendConfigurationsFromHDP206. HDFS Datanode recommended reserved size: 263173188

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for HDFS service.

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for SPARK2 service.

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for YARN service.

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for HIVE service.

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Host List for [service='HIVE'; user='hive'; components='HIVE_SERVER,HIVE_SERVER_INTERACTIVE']: sandbox-hdp.hortonworks.com

2019-12-31 10:48:08,440 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for OOZIE service.

2019-12-31 10:48:08,457 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for FALCON service.

2019-12-31 10:48:08,457 INFO DefaultStackAdvisor _getHadoopProxyUsersForService: - Calculating Hadoop Proxy User recommendations for SPARK service.

2019-12-31 10:48:08,457 INFO DefaultStackAdvisor recommendHadoopProxyUsers: - Updated hadoop.proxyuser.hdfs.hosts as : *

2019-12-31 10:48:08,457 INFO DefaultStackAdvisor recommendHadoopProxyUsers: - Updated hadoop.proxyuser.hive.hosts as : sandbox-hdp.hortonworks.com

2019-12-31 10:48:08,457 INFO DefaultStackAdvisor recommendHadoopProxyUsers: - Updated hadoop.proxyuser.livy.hosts as : *

2019-12-31 10:48:08,458 INFO DefaultStackAdvisor recommendConfigurationsFromHDP26: - Setting HDFS Repo user for Ranger.

2019-12-31 10:48:08,476 INFO HiveServiceAdvisor getServiceConfigurationRecommendations: - Class: HiveServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,493 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Memory for YARN apps - cluster[totalAvailableRam]: 17408

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - user operation context : RecommendAttribute

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Full context: callContext = recommendConfigurations and operation = RecommendAttribute and adding YARN = False and old value exists = None

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Using user provided yarn.scheduler.minimum-allocation-mb = 256

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Minimum ram per container due to user input - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Containers per node - cluster[containers]: 3

2019-12-31 10:48:08,494 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Ram per containers before normalization - cluster[ramPerContainer]: 5802

2019-12-31 10:48:08,512 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Min container size - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:08,528 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for map - cluster[mapMemory]: 5632

2019-12-31 10:48:08,528 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for reduce - cluster[reduceMemory]: 5632

2019-12-31 10:48:08,528 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for am - cluster[amMemory]: 5632

2019-12-31 10:48:08,528 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:08,529 INFO DefaultStackAdvisor recommendHiveConfigurationsFromHDP30: - DBG: Setting 'num_llap_nodes' config's READ ONLY attribute as 'True'.

2019-12-31 10:48:08,529 INFO DefaultStackAdvisor recommendHiveConfigurationsFromHDP30: - Setting Hive Repo user for Ranger.

2019-12-31 10:48:08,546 INFO DefaultStackAdvisor recommendKAFKAConfigurationsFromHDP26: - Not setting Kafka Repo user for Ranger.

2019-12-31 10:48:08,600 INFO DefaultStackAdvisor getServiceConfigurationRecommendations: - Class: MAPREDUCE2ServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,601 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,601 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,610 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP206: - Class: MAPREDUCE2Recommender, Method: recommendYARNConfigurationsFromHDP206. Recommending Service Configurations.

2019-12-31 10:48:08,618 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Memory for YARN apps - cluster[totalAvailableRam]: 17408

2019-12-31 10:48:08,618 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:08,618 INFO DefaultStackAdvisor getConfigurationClusterSummary: - user operation context : RecommendAttribute

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Full context: callContext = recommendConfigurations and operation = RecommendAttribute and adding YARN = False and old value exists = None

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Using user provided yarn.scheduler.minimum-allocation-mb = 256

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Minimum ram per container due to user input - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Containers per node - cluster[containers]: 3

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Ram per containers before normalization - cluster[ramPerContainer]: 5802

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Min container size - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for map - cluster[mapMemory]: 5632

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for reduce - cluster[reduceMemory]: 5632

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for am - cluster[amMemory]: 5632

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:08,619 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,636 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,637 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,637 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,637 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP22: - Updated 'capacity-scheduler' configs as one concatenated string.

2019-12-31 10:48:08,637 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,637 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,655 INFO OozieServiceAdvisor getServiceConfigurationRecommendations: - Class: OozieServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,656 INFO DefaultStackAdvisor recommendOozieConfigurationsFromHDP30: - No oozie configurations available

2019-12-31 10:48:08,673 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,673 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,673 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,674 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,693 INFO SupersetServiceAdvisor getServiceConfigurationRecommendations: - Class: SupersetServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,711 INFO TezServiceAdvisor getServiceConfigurationRecommendations: - Class: TezServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:08,711 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,711 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:08,719 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:08,719 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:11,013 INFO DefaultStackAdvisor recommendTezConfigurationsFromHDP26: - Updated 'tez-site' config 'tez.task.launch.cmd-opts' and 'tez.am.launch.cmd-opts' as : -XX:+PrintGCDetails -verbose:gc -XX:+PrintGCTimeStamps -XX:+UseNUMA -XX:+UseG1GC -XX:+ResizeTLAB{{heap_dump_opts}}

2019-12-31 10:48:11,014 INFO DefaultStackAdvisor recommendTezConfigurationsFromHDP30: - Updated 'tez-site' config 'tez.history.logging.proto-base-dir' and 'tez.history.logging.service.class'

2019-12-31 10:48:11,040 INFO YARNServiceAdvisorf getServiceConfigurationRecommendations: - Class: YARNServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:11,084 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP206: - Class: YARNRecommender, Method: recommendYARNConfigurationsFromHDP206. Recommending Service Configurations.

2019-12-31 10:48:11,136 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Memory for YARN apps - cluster[totalAvailableRam]: 17408

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - user operation context : RecommendAttribute

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Full context: callContext = recommendConfigurations and operation = RecommendAttribute and adding YARN = False and old value exists = None

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Using user provided yarn.scheduler.minimum-allocation-mb = 256

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Minimum ram per container due to user input - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Containers per node - cluster[containers]: 3

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Ram per containers before normalization - cluster[ramPerContainer]: 5802

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Min container size - cluster[yarnMinContainerSize]: 256

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for map - cluster[mapMemory]: 5632

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for reduce - cluster[reduceMemory]: 5632

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getConfigurationClusterSummary: - Available memory for am - cluster[amMemory]: 5632

2019-12-31 10:48:11,137 INFO DefaultStackAdvisor getCallContext: - call type context : {'call_type': 'recommendConfigurations'}

2019-12-31 10:48:11,209 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:11,217 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:11,217 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:11,217 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:11,218 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP22: - Updated 'capacity-scheduler' configs as one concatenated string.

2019-12-31 10:48:11,218 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:11,218 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:11,218 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - Setting Yarn Repo user for Ranger.

2019-12-31 10:48:11,244 INFO DefaultStackAdvisor calculate_yarn_apptimelineserver_cache_size: - Calculated and returning 'yarn_timeline_app_cache_size' : 10

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - Updated YARN config 'yarn.timeline-service.entity-group-fs-store.app-cache-size' as : 10, using 'host_mem' = 32917000

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - Updated YARN config 'apptimelineserver_heapsize' as : 8072,

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is yarn_restyps: '[]'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is docker_allowed_devices: '[]'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is docker_allowed_volume-drivers: '[]'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is docker.allowed.ro-mounts: '[]'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is yarn.nodemanager.resource-plugins.gpu.allowed-gpu-devices: '[u'auto']'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is yarn.nodemanager.resource-plugins.gpu.docker-plugin: '[u'nvidia-docker-v1']'.

2019-12-31 10:48:11,245 INFO DefaultStackAdvisor recommendYARNConfigurationsFromHDP26: - new what is yarn.nodemanager.resource-plugins.gpu.docker-plugin.nvidiadocker-v1.endpoint: '[u'http://localhost:3476/v1.0/docker/cli']'.

2019-12-31 10:48:11,263 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - 'capacity-scheduler' configs is passed-in as a single '\n' separated string. count(services['configurations']['capacity-scheduler']['properties']['capacity-scheduler']) = 21

2019-12-31 10:48:11,263 INFO DefaultStackAdvisor getCapacitySchedulerProperties: - Retrieved 'capacity-scheduler' received as dictionary : 'False'. configs : [('yarn.scheduler.capacity.default.minimum-user-limit-percent', '100'), ('yarn.scheduler.capacity.root.default.acl_administer_queue', 'yarn'), ('yarn.scheduler.capacity.node-locality-delay', '40'), ('yarn.scheduler.capacity.root.accessible-node-labels', '*'), ('yarn.scheduler.capacity.root.capacity', '100'), ('yarn.scheduler.capacity.schedule-asynchronously.enable', 'true'), ('yarn.scheduler.capacity.maximum-am-resource-percent', '0.5'), ('yarn.scheduler.capacity.maximum-applications', '10000'), ('yarn.scheduler.capacity.root.default.user-limit-factor', '1'), ('yarn.scheduler.capacity.root.default.maximum-capacity', '100'), ('yarn.scheduler.capacity.root.acl_submit_applications', 'yarn,ambari-qa'), ('yarn.scheduler.capacity.root.default.acl_submit_applications', 'yarn,yarn-ats'), ('yarn.scheduler.capacity.root.default.state', 'RUNNING'), ('yarn.scheduler.capacity.root.default.capacity', '100'), ('yarn.scheduler.capacity.root.acl_administer_queue', 'yarn,spark,hive'), ('yarn.scheduler.capacity.root.queues', 'default'), ('', ''), ('yarn.scheduler.capacity.schedule-asynchronously.maximum-threads', '1'), ('yarn.scheduler.capacity.root.default.acl_administer_jobs', 'yarn'), ('yarn.scheduler.capacity.schedule-asynchronously.scheduling-interval-ms', '10'), ('yarn.scheduler.capacity.resource-calculator', 'org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator')]

2019-12-31 10:48:11,264 INFO ZookeeperServiceAdvisor getServiceConfigurationRecommendations: - Class: ZookeeperServiceAdvisor, Method: getServiceConfigurationRecommendations. Recommending Service Configurations.

2019-12-31 10:48:11,281 INFO ZookeeperServiceAdvisor recommendConfigurations: - Class: ZookeeperServiceAdvisor, Method: recommendConfigurations. Recommending Service Configurations.

2019-12-31 10:48:11,281 INFO ZookeeperServiceAdvisor recommendConfigurations: - Setting zoo.cfg to default dataDir to /hadoop/zookeeper on the best matching mount

2019-12-31 10:48:11,764 INFO [ambari-client-thread-37] StackAdvisorRunner:167 - Advisor script stderr:

Created 12-31-2019 04:32 AM

This may be slightly off topic, but when doing AD/LDAP integration, I always start with the Ambari LDAP part first. This helps me identify the settings required to connect from Ambari to LDAP, and the correct settings for user sync and group sync (which are sometimes different). While running the Ambari LDAP setup and sync commands, I also tail the Ambari Server log file for the additional output needed to debug the connection and sync steps. After completing these steps, I have a clear idea of which settings can be used for Ranger.
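A minimal sketch of the "tail the log while you test" habit, assuming the default Ambari Server log location `/var/log/ambari-server/ambari-server.log`. The filter keeps only lines from the two authentication classes that appear in the log excerpts later in this thread; `ambari_auth_filter` and `AMBARI_LOG` are illustrative names, not part of Ambari.

```shell
#!/usr/bin/env bash
# Assumption: default log location of a standard Ambari Server install.
# Override by exporting AMBARI_LOG before sourcing this snippet.
AMBARI_LOG="${AMBARI_LOG:-/var/log/ambari-server/ambari-server.log}"

# Illustrative helper (not part of Ambari): read log lines on stdin and keep
# only those written by the classes that log login attempts in this thread.
ambari_auth_filter() {
  grep -E 'AmbariAuthenticationEventHandlerImpl|AmbariLocalAuthenticationProvider'
}

# Usage, in a second terminal while running setup-ldap, sync-ldap or a login:
#   tail -F "$AMBARI_LOG"                        # everything, e.g. during sync-ldap
#   tail -F "$AMBARI_LOG" | ambari_auth_filter   # only login attempts
```

Keeping the unfiltered `tail` for the setup and sync runs is deliberate: connection errors during those steps may come from other classes than the two matched here.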

Helpful Links:

Created 12-31-2019 04:56 AM

@stevenmatison thank you for your reply; however, I've set up Kerberos as the authentication method, not LDAP: https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/authentication-with-kerberos/content/configuri...

Created 12-31-2019 05:07 AM

The post says LDAP, and aren't you trying to sync the LDAP users and groups to Ranger? Wouldn't knowing the required settings for each sync be helpful?

Kerberos is another beast, which I personally would not tackle until the Ambari and Ranger integration is complete.

Created 12-31-2019 06:12 AM

@stevenmatison I first Kerberized the cluster. The Ranger user/group sync is indeed via LDAP. I might not have all the concepts straight, but from what I understand, Kerberos is used for authentication whereas LDAP is used for authorization?

I can get a Kerberos ticket using kinit, and the hdfs groups call then uses that ticket. I'm not sure how it matches the Kerberos-authenticated user to the LDAP user/group (which is exactly the problem I'm facing)...
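Roughly, the flow is: Hadoop turns the Kerberos principal into a short user name using the `hadoop.security.auth_to_local` rules, and the NameNode then looks that short name up through whatever `hadoop.security.group.mapping` implementation core-site configures (for LDAP, `org.apache.hadoop.security.LdapGroupsMapping`). The sketch below assumes the default rule and the realm `EXAMPLE.COM`; `principal_short_name` and `show_group_mapping_conf` are hypothetical helpers, while the `hadoop`/`hdfs` commands and property keys are standard Hadoop ones.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for tracing: Kerberos principal -> short name -> groups.

# Approximates Hadoop's DEFAULT auth_to_local rule for a principal in the
# cluster's default realm: keep only the first component of the name.
# Custom hadoop.security.auth_to_local rules can produce something else.
principal_short_name() {
  local name="${1%%@*}"        # drop "@REALM"
  printf '%s\n' "${name%%/*}"  # drop "/host" from service principals
}

# Prints the identity and group-mapping settings from the LOCAL client config.
show_group_mapping_conf() {
  local key
  for key in \
    hadoop.security.auth_to_local \
    hadoop.security.group.mapping \
    hadoop.security.group.mapping.ldap.url \
    hadoop.security.group.mapping.ldap.base \
    hadoop.security.group.mapping.ldap.search.filter.user \
    hadoop.security.group.mapping.ldap.search.filter.group \
    hadoop.security.group.mapping.ldap.search.attr.member \
    hadoop.security.group.mapping.ldap.search.attr.group.name; do
    printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key" 2>/dev/null)"
  done
}

# Usage (realm and user name are examples):
#   kinit ttester@EXAMPLE.COM
#   hadoop org.apache.hadoop.security.HadoopKerberosName ttester@EXAMPLE.COM
#   show_group_mapping_conf
#   hdfs dfsadmin -refreshUserToGroupsMappings   # needs HDFS superuser credentials
#   hdfs groups ttester
```

Two caveats: `hdfs getconf` reads the configuration on the host where it runs, while `hdfs groups` is answered by the NameNode, so core-site changes only take effect there after a NameNode restart, and previously cached group lookups can be cleared with `hdfs dfsadmin -refreshUserToGroupsMappings`. Also, the `LdapGroupsMapping` default filters are Active Directory oriented (`(&(objectClass=user)(sAMAccountName={0}))` for users, `(objectClass=group)` for groups), so an OpenLDAP directory with `posixAccount` entries would typically need a user filter such as `(&(objectClass=posixAccount)(uid={0}))` and a group filter matching the directory's group object class.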

Created 01-02-2020 04:50 AM

@stevenmatison well, I've set up the Ambari/LDAP integration. The LDAP users show up in the Ambari user admin, and I've assigned one of them as Ambari administrator. However, it is not possible to log in with this user due to invalid credentials.

INFO [ambari-client-thread-111] AmbariAuthenticationEventHandlerImpl:136 - Failed to authenticate custom.user (attempt #1): Unable to sign in. Invalid username/password combination.

I can log in with the user in the LDAP admin interface; only logging into Ambari doesn't work. The username must be correct, because otherwise the error message states:

INFO [ambari-client-thread-303] AmbariAuthenticationEventHandlerImpl:136 - Failed to authenticate cn=custom.user,dc=customdomain,dc=com: The user does not exist in the Ambari database

Created 01-02-2020 05:27 AM

It seems like you do not have the right settings.

I would look into the CN value. You may be syncing the users from a higher level of the DN tree, and then the authentication string is invalid because the CN value is wrong.

Sorry I can't be more helpful, but as I suggested in my original post, going through the Ambari LDAP setup will help identify the correct strings. It is usually a challenge even when someone says "here are all the settings".
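One way to find the exact DN to use is to reproduce the lookup by hand: search for the user with the object class and user ID attribute entered in `ambari-server setup-ldap`, then bind as the DN that comes back. This sketch assumes the OpenLDAP client tools (`ldapsearch`, `ldapwhoami`) and the `dc=example,dc=com` directory used later in this thread; `ldap_user_filter` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the user search filter from the same values
# entered in `ambari-server setup-ldap` (user object class, user ID attribute).
# No escaping of LDAP filter special characters; fine for names like ttester.
ldap_user_filter() {
  local object_class="$1" id_attr="$2" login="$3"
  printf '(&(objectClass=%s)(%s=%s))\n' "$object_class" "$id_attr" "$login"
}

# Usage against the directory from this thread (values are assumptions):
#   LDAP_URI=ldap://localhost:389
#   BASE=dc=example,dc=com
#   # 1. Search as the bind DN; the "dn:" line printed is the entry's full DN.
#   ldapsearch -x -H "$LDAP_URI" -D "cn=admin,$BASE" -W -b "$BASE" \
#     "$(ldap_user_filter posixAccount uid ttester)" dn uid cn
#   # 2. Bind as that DN with the user's own password, roughly what happens
#   #    when the user logs in.
#   ldapwhoami -x -H "$LDAP_URI" -D "<dn from step 1>" -W
```

If step 1 returns nothing, the object class, ID attribute or search base entered in setup-ldap does not match the directory; if step 2 fails, the problem is the password or the DN rather than Ambari.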

Created 01-06-2020 03:59 AM

@stevenmatison thanks for your continued replies.

I really can't make sense of it. I accept that this is probably not related to Ambari/HDP anymore, but the issue is that I am actually at the point of "here are all the settings". I keep redeploying both the LDAP server and the Sandbox from a fresh install... 🙂

LDAP server / Admin

docker run -d -p 389:389 -e SLAPD_PASSWORD=testing -e SLAPD_DOMAIN=example.com dinkel/openldap

docker run -p 6443:443 --env PHPLDAPADMIN_LDAP_HOSTS=localhost --detach osixia/phpldapadmin:0.9.0

Inside the admin interface I create a posixGroup (testers) and a posixAccount (testing).

Then inside the sandbox

ambari-admin-password-reset

ambari-server setup-ldap

Using python /usr/bin/python

Currently 'no auth method' is configured, do you wish to use LDAP instead [y/n] (y)? y

Please select the type of LDAP you want to use (AD, IPA, Generic LDAP):Generic LDAP

Primary LDAP Host (ldap.ambari.apache.org): localhost

Primary LDAP Port (389):

Secondary LDAP Host <Optional>:

Secondary LDAP Port <Optional>:

Use SSL [true/false] (false):

User object class (posixUser): posixAccount

User ID attribute (uid):

Group object class (posixGroup): groupOfNames

Group name attribute (cn):

Group member attribute (memberUid): member

Distinguished name attribute (dn):

Search Base (dc=ambari,dc=apache,dc=org): dc=example,dc=com

Referral method [follow/ignore] (follow):

Bind anonymously [true/false] (false):

Bind DN (uid=ldapbind,cn=users,dc=ambari,dc=apache,dc=org): cn=admin,dc=example,dc=com

Enter Bind DN Password:

Confirm Bind DN Password:

Handling behavior for username collisions [convert/skip] for LDAP sync (skip):

Force lower-case user names [true/false]:

Results from LDAP are paginated when requested [true/false]:

====================

Review Settings

====================

Primary LDAP Host (ldap.ambari.apache.org): localhost

Primary LDAP Port (389): 389

Use SSL [true/false] (false): false

User object class (posixUser): posixAccount

User ID attribute (uid): uid

Group object class (posixGroup): groupOfNames

Group name attribute (cn): cn

Group member attribute (memberUid): member

Distinguished name attribute (dn): dn

Search Base (dc=ambari,dc=apache,dc=org): dc=example,dc=com

Referral method [follow/ignore] (follow): follow

Bind anonymously [true/false] (false): false

Handling behavior for username collisions [convert/skip] for LDAP sync (skip): skip

ambari.ldap.connectivity.bind_dn: cn=admin,dc=example,dc=com

ambari.ldap.connectivity.bind_password: *****

Save settings [y/n] (y)? y

Saving LDAP properties...

Enter Ambari Admin login: admin

Enter Ambari Admin password:

Saving LDAP properties finished

Ambari Server 'setup-ldap' completed successfully.

And sync:

ambari-server sync-ldap --all

Using python /usr/bin/python

Syncing with LDAP...

Enter Ambari Admin login: admin

Enter Ambari Admin password:

Fetching LDAP configuration from DB.

Syncing all...

Completed LDAP Sync.

Summary:

memberships:

removed = 0

created = 0

users:

skipped = 0

removed = 0

updated = 0

created = 1

groups:

updated = 0

removed = 0

created = 0

Ambari Server 'sync-ldap' completed successfully.
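The sync summary above shows `groups: created = 0` and `memberships: created = 0`. One possible cause, offered as an assumption rather than a confirmed diagnosis: the group was created as a `posixGroup` (members listed in `memberUid`), but `setup-ldap` was answered with `groupOfNames` and `member` instead of the prompts' own defaults. A quick check is to count what each object class matches; `count_ldif_entries` is a hypothetical helper, and the connection values are the ones used in this thread.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count entries in LDIF read from stdin. Each entry has
# exactly one "dn:" line ("dn::" is the base64 form and is matched too).
# Prints 0 (instead of failing) when nothing matches.
count_ldif_entries() {
  grep -c '^dn:' || true
}

# Usage (values from this thread; -LLL drops comments from the output):
#   BASE=dc=example,dc=com
#   ldapsearch -x -LLL -H ldap://localhost:389 -D "cn=admin,$BASE" -W -b "$BASE" \
#     '(objectClass=posixGroup)' cn memberUid | count_ldif_entries
#   ldapsearch -x -LLL -H ldap://localhost:389 -D "cn=admin,$BASE" -W -b "$BASE" \
#     '(objectClass=groupOfNames)' cn member | count_ldif_entries
```

If the `posixGroup` search finds the group and the `groupOfNames` search finds nothing, re-running `ambari-server setup-ldap` with `posixGroup` and `memberUid`, followed by `ambari-server sync-ldap --all`, would be the next thing to try.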

I make the user an admin in Ambari.

But whatever value I use to log in, it just won't work 😞

- ttester (uid)

INFO [ambari-client-thread-244] AmbariAuthenticationEventHandlerImpl:136 - Failed to authenticate ttester (attempt #1): Unable to sign in. Invalid username/password combination.

- tester.tester (cn)

INFO [ambari-client-thread-33] AmbariLocalAuthenticationProvider:71 - User not found: tester.tester

INFO [ambari-client-thread-33] AmbariAuthenticationEventHandlerImpl:136 - Failed to authenticate tester.tester: The user does not exist in the Ambari database

- cn=tester.tester,dc=example,dc=com (cn)

INFO [ambari-client-thread-240] AmbariLocalAuthenticationProvider:71 - User not found: cn=admin,dc=example,dc=com

INFO [ambari-client-thread-240] AmbariAuthenticationEventHandlerImpl:136 - Failed to authenticate cn=admin,dc=example,dc=com: The user does not exist in the Ambari database
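The two failure messages point at different stages, as the poster reasoned earlier: "The user does not exist in the Ambari database" means the login name did not match any user Ambari knows, while "Invalid username/password combination" suggests the name matched but the password check did not succeed. The sketch sorts log lines into those two buckets; `classify_auth_failure` is hypothetical and the message texts are copied from the excerpts above. The poster later reported that running `ambari-server restart` after `setup-ldap` is what made LDAP login work, so that is worth trying before changing other settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: label Ambari authentication failure lines read on stdin.
# Output: "<label><TAB><original line>"; lines matching neither message are dropped.
classify_auth_failure() {
  local line
  while IFS= read -r line; do
    case "$line" in
      *"does not exist in the Ambari database"*)
        printf 'unknown-user\t%s\n' "$line" ;;
      *"Invalid username/password combination"*)
        printf 'bad-credentials\t%s\n' "$line" ;;
    esac
  done
}

# Usage (log path assumed to be the Ambari Server default):
#   ambari-server restart   # so the settings saved by setup-ldap take effect
#   grep AmbariAuthenticationEventHandlerImpl \
#     /var/log/ambari-server/ambari-server.log | classify_auth_failure
```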