Cloudera Community: Support Questions


hive context save file

Labels: Apache Hive

Created 06-07-2016 07:05 AM


Hello

I use HiveContext to load and manipulate data stored in ORC format. How can I save the results of an SQL query to a file in HDFS?

Could you please help?

Here is my HiveContext code. I would like to save the query results to a file on my HDFS:

Thank you in advance.

```python
from pyspark import SparkContext
from pyspark.sql import HiveContext

sc = SparkContext()
hive_context = HiveContext(sc)

# Load the ORC-backed Hive table and expose it to SQL as a temporary table
qvol = hive_context.table("<bdd_name>.<table_name>")
qvol.registerTempTable("qvol_temp")
hive_context.sql("select * from qvol_temp limit 10").show()
```

Created 06-07-2016 07:11 AM


@alain TSAFACK please use saveAsHadoopFile, which will write to HDFS:

```python
saveAsHadoopFile("<file-name>", "<file output format>", compressionCodecClass="org.apache.hadoop.io.compress.GzipCodec")
```

or

```python
hive_context.write.format("orc").save("test_orc")
```


Created on 06-07-2016 07:36 AM - edited 08-19-2019 03:14 AM


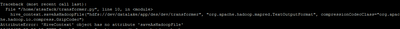

Thank you. However, both saveAsHadoopFile and .write.format raise errors (see the screenshot above), which means that neither attribute is recognized by HiveContext.

Thank you!

Created 06-07-2016 07:38 AM


saveAsHadoopFile applies to RDDs, not to DataFrames. Can you try `hive_context.write.format("orc").save("test_orc")`?
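To illustrate the RDD route, here is a minimal sketch. It assumes a HiveContext object like the one above and a placeholder HDFS path. The DataFrame returned by `sql()` is converted with `.rdd`, each `Row` (a tuple subclass in PySpark) is turned into a CSV line, and the result is written with `RDD.saveAsTextFile`, which accepts the same kind of `compressionCodecClass` as `saveAsHadoopFile`. The helper names `row_to_csv_line` and `save_query_as_text` are hypothetical.

```python
import csv
import io


def row_to_csv_line(row):
    """Serialize one Row/tuple to a single CSV line (None becomes an empty field)."""
    buf = io.StringIO()
    csv.writer(buf).writerow(row)
    return buf.getvalue().rstrip("\r\n")  # drop the writer's line terminator


def save_query_as_text(sql_context, query, hdfs_path, gzip=False):
    """Hypothetical helper: run `query` and write the rows as CSV text files.

    saveAsTextFile/saveAsHadoopFile are RDD methods, so the DataFrame
    returned by sql() is converted with .rdd before writing.
    """
    codec = "org.apache.hadoop.io.compress.GzipCodec" if gzip else None
    lines = sql_context.sql(query).rdd.map(row_to_csv_line)
    lines.saveAsTextFile(hdfs_path, compressionCodecClass=codec)
```

For example, `save_query_as_text(sqlContext, "select * from default.aaa limit 3", "<hdfs-output-dir>", gzip=True)` would write a directory of part files at the given path rather than a single file.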

Created 06-07-2016 07:49 AM


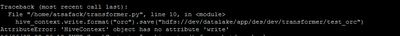

I tried `hive_context.write.format("orc").save("test_orc")`, but I get this error:

```
>>> hive_context.write.format("orc").save("hdfs://dev/datalake/app/des/dev/transformer/test_orc")
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
AttributeError: 'HiveContext' object has no attribute 'write'
```

Thanks

Created 06-07-2016 11:37 AM


Could you please modify your program as follows and check whether you still get an exception?

```python
from pyspark import SparkContext
from pyspark.sql import HiveContext

sc = SparkContext()
sqlContext = HiveContext(sc)

# sql() returns a DataFrame; .write is defined on the DataFrame, not on HiveContext
sqlContext.sql("select * from default.aaa limit 3").write.format("orc").save("test_orc2")
```
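The change works because `write` belongs to the DataFrame that `sql()` returns, not to `HiveContext`, which explains the earlier `AttributeError`. A slightly more general sketch, assuming Spark 1.4 or later (where `DataFrameWriter.mode` is available) and a HiveContext (which Spark 1.x needs for the ORC format). The function name `save_query_as_orc` and its default mode are illustrative choices, not part of the original answer.

```python
def save_query_as_orc(sql_context, query, hdfs_path, mode="error"):
    """Run `query` through a HiveContext and save the result as ORC files.

    `mode` is passed to DataFrameWriter.mode(): "error" (fail if the path
    already exists), "overwrite", "append" or "ignore".
    """
    df = sql_context.sql(query)  # a DataFrame, which exposes .write
    df.write.format("orc").mode(mode).save(hdfs_path)
    return df
```

For instance, `save_query_as_orc(sqlContext, "select * from default.aaa limit 3", "test_orc2", mode="overwrite")` replaces any earlier output at that path instead of failing.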

Created 06-08-2016 08:42 AM


Created 06-09-2016 03:25 PM
