Support Questions

Cloudera Community

"Requested user hive is not whitelisted and has id 504,which is below the minimum allowed 1000" when running Hive query with explode function

Labels: Apache Ambari, Apache Hive, Apache Tez

Created on 03-21-2016 03:35 PM - edited 08-19-2019 01:46 AM

Hello,

I am using the HDP 2.3.4 sandbox, which has been kerberized.

I have loaded some data with struct and array types into Hive; the schema looks like this:

    exampletable
     |-- listOfPeople: array (nullable = false)
     |    |-- element: struct (containsNull = true)
     |    |    |-- Name: string (nullable = false)
     |    |    |-- id: integer (nullable = false)
     |    |    |-- Email: string (nullable = false)
     |    |    |-- holiday: array (nullable = false)
     |    |    |    |-- element: integer (containsNull = true)
     |-- departmentName: string (nullable = false)

I am trying to run this query in Hive View:

    SELECT explode(listofpeople.name) AS name from exampletable;

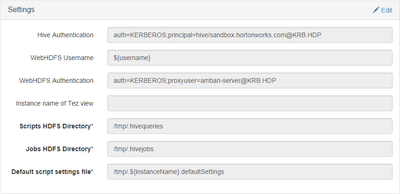

with these Hive View settings:

However, I am getting this error:

    INFO : Tez session hasn't been created yet. Opening session
    ERROR : Failed to execute tez graph.
    org.apache.tez.dag.api.SessionNotRunning: TezSession has already shutdown. Application application_1458555995218_0002 failed 2 times due to AM Container for appattempt_1458555995218_0002_000002 exited with exitCode: -1000
    For more detailed output, check application tracking page: http://sandbox.hortonworks.com:8088/cluster/app/application_1458555995218_0002
    Then, click on links to logs of each attempt.
    Diagnostics: Application application_1458555995218_0002 initialization failed (exitCode=255) with output: main : command provided 0
    main : run as user is hive
    main : requested yarn user is hive
    Requested user hive is not whitelisted and has id 504,which is below the minimum allowed 1000
    Failing this attempt. Failing the application.
        at org.apache.tez.client.TezClient.waitTillReady(TezClient.java:726)
        at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.open(TezSessionState.java:217)
        at org.apache.hadoop.hive.ql.exec.tez.TezTask.updateSession(TezTask.java:271)
        at org.apache.hadoop.hive.ql.exec.tez.TezTask.execute(TezTask.java:151)
        at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160)
        at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:89)
        at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1703)
        at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1460)
        at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1237)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1101)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1096)
        at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:154)
        at org.apache.hive.service.cli.operation.SQLOperation.access$100(SQLOperation.java:71)
        at org.apache.hive.service.cli.operation.SQLOperation$1$1.run(SQLOperation.java:206)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)
        at org.apache.hive.service.cli.operation.SQLOperation$1.run(SQLOperation.java:218)
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)

This thread suggests that this is the desired behaviour, and Google suggests changing allowed.system.users in yarn-site, but that doesn't seem to work.

If I just want to run the query successfully on the sandbox, what needs to be done? Or what is the best-practice solution for this?

Thank you.

Created 03-21-2016 04:31 PM

Here is the Hortonworks documentation I read; see page 19:

Each account must have a user ID that is greater than or equal to 1000. In the /etc/hadoop/conf/taskcontroller.cfg file, the default setting for the banned.users property is mapred, hdfs, and bin to prevent jobs from being submitted via those user accounts. The default setting for the min.user.id property is 1000 to prevent jobs from being submitted with a user ID less than 1000, which are conventionally Unix super users.

Hope that explains it.
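To make the rule concrete, here is a small Python sketch of the check described in that documentation, extended with the allowed.system.users whitelist mentioned in the question. It is a hypothetical model, not the actual executor code: `ExecutorConfig` and `check_user` are illustrative names, the defaults come from the quoted documentation, the order of the two checks is an assumption, and the rejection text is copied from the log in the question. With hive at UID 504 and the defaults, the launch is refused; whitelisting hive or lowering min.user.id to 504 or less lets it through in this model.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Defaults taken from the documentation quoted above.
DEFAULT_BANNED_USERS: Tuple[str, ...] = ("mapred", "hdfs", "bin")
DEFAULT_MIN_USER_ID = 1000


@dataclass(frozen=True)
class ExecutorConfig:
    """Hypothetical model of the three executor settings discussed in this thread."""
    min_user_id: int = DEFAULT_MIN_USER_ID                # min.user.id
    banned_users: Tuple[str, ...] = DEFAULT_BANNED_USERS  # banned.users
    allowed_system_users: Tuple[str, ...] = ()            # allowed.system.users


def check_user(user: str, uid: int, cfg: ExecutorConfig) -> Optional[str]:
    """Return the rejection message for `user`, or None if the launch is allowed."""
    # A UID below the minimum is only accepted if the user is explicitly whitelisted.
    if uid < cfg.min_user_id and user not in cfg.allowed_system_users:
        return (f"Requested user {user} is not whitelisted and has id {uid},"
                f"which is below the minimum allowed {cfg.min_user_id}")
    # Banned accounts are refused even if they pass the UID check.
    if user in cfg.banned_users:
        return f"Requested user {user} is banned"
    return None


if __name__ == "__main__":
    # hive has UID 504 on the sandbox, per the error in the question.
    print(check_user("hive", 504, ExecutorConfig()))
    print(check_user("hive", 504, ExecutorConfig(allowed_system_users=("hive",))))
    print(check_user("hive", 504, ExecutorConfig(min_user_id=500)))
```

The first call reproduces the message from the question; the other two print `None`.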

Created 03-21-2016 03:38 PM

Have you also changed the value of min.user.id?

Alternatively, you can change the hive user's ID to 1000 or above.
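Both suggestions target the same rule: a job may only run as a user whose UID is at least min.user.id, unless that user is whitelisted in allowed.system.users. As far as I know, these keys are read from the executor's own config file (taskcontroller.cfg in the documentation quoted in this thread, container-executor.cfg under YARN), not from yarn-site.xml. The Python sketch below shows the config-side option as a plain text edit of a `key=value` file. Assumptions: one `key=value` per line; `set_cfg_value` and the sample contents are hypothetical; and on an Ambari-managed cluster such as the sandbox the file is likely regenerated from Ambari's YARN settings, so a hand edit may be overwritten and the change is better made through Ambari.

```python
def set_cfg_value(text: str, key: str, value: str) -> str:
    """Return `text` with `key` set to `value`.

    Replaces the first active `key=value` line for `key`; if there is none,
    appends a new line. Commented-out lines (starting with '#') are left alone.
    """
    lines = text.splitlines()
    for i, line in enumerate(lines):
        name, sep, _ = line.partition("=")
        if sep and name.strip() == key:
            lines[i] = f"{key}={value}"
            break
    else:
        # No existing entry for this key: add one at the end.
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical sample using the defaults from the quoted documentation.
    cfg = "banned.users=mapred,hdfs,bin\nmin.user.id=1000\n"
    # Option 1: lower the minimum UID so that hive (UID 504) passes.
    print(set_cfg_value(cfg, "min.user.id", "500"), end="")
    # Option 2: keep the minimum but whitelist the hive account.
    print(set_cfg_value(cfg, "allowed.system.users", "hive"), end="")
```

Whitelisting a single account is the narrower change; lowering min.user.id opens job submission to every system account above the new threshold that is not banned.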

Created 03-21-2016 03:56 PM

@Rahul Pathak - yes, I have; as I said, they don't seem to work...

Created 05-16-2016 11:53 AM

Hello, I had forgotten about this, but in the end I actually got it to work.

What needed to be done was to use kadmin to create a new keytab and add the principal ambari-server@KRB.HDP to it. The sandbox also needed a full restart.

See "Setup Kerberos for Ambari Server".
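For reference, the kadmin part of that fix could be scripted roughly as below. This is a sketch under assumptions: it targets MIT Kerberos `kadmin.local` on the KDC host (typically run as root), uses `addprinc -randkey` to create the principal (skip that step if it already exists) and `xst` (an alias of `ktadd`) to write its key into a keytab. The keytab path is hypothetical and should match whatever the Ambari Kerberos setup guide asks for. By default the script only prints the commands.

```python
import shlex
import subprocess
from typing import List

PRINCIPAL = "ambari-server@KRB.HDP"  # principal named in this thread
# Hypothetical keytab path: use the location your Ambari setup guide specifies.
KEYTAB = "/etc/security/keytabs/ambari.server.keytab"


def kadmin_commands(principal: str, keytab: str) -> List[List[str]]:
    """Build kadmin.local invocations: create the principal, then export it to a keytab."""
    return [
        # Create the principal with a random key (skip if it already exists).
        ["kadmin.local", "-q", f"addprinc -randkey {principal}"],
        # Write the principal's key into the keytab file (xst is an alias of ktadd).
        ["kadmin.local", "-q", f"xst -k {keytab} {principal}"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(kadmin_commands(PRINCIPAL, KEYTAB))
```

After the keytab exists, the remaining Ambari-side steps and the full sandbox restart follow the guide referenced above.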

Thanks to @Geoffrey Shelton Okot for pointing me in the right direction.