Created on 02-02-2022 08:42 AM - edited on 02-02-2022 08:56 PM by subratadas

COD - CDE Spark-HBase tutorial

This article, which applies to CDP Public Cloud, walks through the steps required to read from and write to COD (Cloudera Operational Database) from Spark on CDE (Cloudera Data Engineering) using the spark-hbase connector.

If you are looking to leverage Phoenix instead, please refer to this community article.

Assumptions

- COD is already provisioned and the database is created. Refer to this link for the same. For this example, we will assume "amallegni-cod" as the database name.

- CDE is already provisioned and the virtual cluster is already created. Refer to this link for the same.

COD

Download client configuration

For Spark in CDE to talk to COD, it needs the hbase-site.xml configuration of the COD cluster. Perform the following steps to retrieve it:

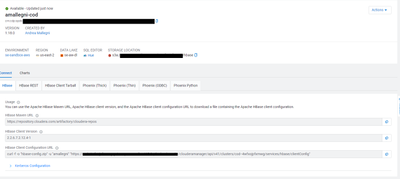

- Go to the COD control plane UI and click on "amallegni-cod" database.

- Under the Connect tab of the COD database, look for the HBase Client Configuration URL field, shown in the screenshot above.

The configuration can be downloaded using the following curl command.

curl -f -o "hbase-config.zip" -u "<YOUR WORKLOAD USERNAME>" "https://cod--4wfxojpfxmwg-gateway.XXXXXXXXX.cloudera.site/clouderamanager/api/v41/clusters/cod--4wfxojpfxmwg/services/hbase/clientConfig"

- When curl prompts for a password, provide your "workload" password.

- Explore the downloaded zip file to obtain the hbase-site.xml file.
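The last step can be sketched as a small shell function. This is a minimal sketch, assuming the archive was saved as hbase-config.zip and that the unzip utility is installed. The function name extract_hbase_site and the default output directory are illustrative and not part of any Cloudera tooling. The layout of the archive is not assumed, so the function searches for the file instead of hard-coding its location.

```shell
# Hypothetical helper: unpack the client configuration archive and print
# the path of the hbase-site.xml found inside it.
extract_hbase_site() {
  zip_file=$1
  out_dir=${2:-hbase-config}

  # Fail early if the archive was not downloaded.
  if [ ! -f "$zip_file" ]; then
    echo "archive not found: $zip_file" >&2
    return 1
  fi

  # -o overwrites files from a previous run, -q keeps the output quiet.
  unzip -o -q "$zip_file" -d "$out_dir" || return 1

  # Search for the file rather than assuming the archive layout.
  site_file=$(find "$out_dir" -type f -name hbase-site.xml | head -n 1)
  if [ -z "$site_file" ]; then
    echo "hbase-site.xml not found in $zip_file" >&2
    return 1
  fi
  printf '%s\n' "$site_file"
}
```

Calling extract_hbase_site hbase-config.zip prints the location of the extracted file, which is the file to upload to CDE later in this article.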

Create the HBase table

In this example, we are going to use Hue in the COD database to quickly create a new HBase table. Let's walk through this step by step:

- Go to the COD control plane UI and click on "amallegni-cod" database.

- Click on the Hue link.

Once in Hue, click on the HBase menu item on the left sidebar, and then click on the New Table button in the top right corner:

Choose your table name and column families, and then click on the Submit button.

For the sake of this example, let's call the table 'testtable' and let's create a single column family called 'testcf'.

CDE

For complete Spark code examples, refer to the HbaseRead.scala and HBaseWrite.scala examples.

Configure your Job via CDE CLI

To configure the job via CDE CLI, perform these steps:

- Configure CDE CLI to point to the virtual cluster created in the above step. For more details, see Configuring the CLI client.

- Create resources using the following command.

cde resource create --name cod-spark-resource

- Upload hbase-site.xml.

cde resource upload --name cod-spark-resource --local-path /your/path/to/hbase-site.xml --resource-path conf/hbase-site.xml

- Upload the demo app jar that was built earlier.

cde resource upload --name cod-spark-resource --local-path /path/to/your/spark-hbase-project.jar --resource-path spark-hbase-project.jar

- Create the CDE job using a JSON definition which should look like this:

{
  "mounts": [
    {
      "resourceName": "cod-spark-resource"
    }
  ],
  "name": "my-cod-spark-job",
  "spark": {
    "className": "<YOUR MAIN CLASS>",
    "conf": {
      "spark.executor.extraClassPath": "/app/mount/conf",
      "spark.driver.extraClassPath": "/app/mount/conf"
    },
    "args": ["<YOUR ARGS IF ANY>"],
    "driverCores": 1,
    "driverMemory": "1g",
    "executorCores": 1,
    "executorMemory": "1g",
    "file": "spark-hbase-project.jar",
    "pyFiles": [],
    "files": ["conf/hbase-site.xml"],
    "numExecutors": 4
  }
}

Finally, assuming the above JSON was saved into my-job-definition.json, import the job using the following command:

cde job import --file my-job-definition.json
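The CLI steps above can be collected into one script. The following is a minimal sketch, not an official workflow. It uses only the cde subcommands and flags shown in this article. The function names (mount_classpath, write_job_definition, deploy_cod_spark_job) and their arguments are hypothetical. It also assumes, as this article does, that resource files are mounted under /app/mount, so the classpath entry is derived from the directory part of the resource path.

```shell
# Derive the classpath entry from a resource path, assuming (as in this
# article) that resource files are mounted under /app/mount.
mount_classpath() {
  dir=$(dirname "$1")
  if [ "$dir" = "." ]; then
    echo "/app/mount"
  else
    echo "/app/mount/$dir"
  fi
}

# Print a job definition shaped like the JSON in this article.
# This sketch passes no job arguments ("args" is left empty).
write_job_definition() {
  resource_name=$1; job_name=$2; main_class=$3; jar_name=$4; site_path=$5
  classpath=$(mount_classpath "$site_path")
  cat <<EOF
{
  "mounts": [ { "resourceName": "$resource_name" } ],
  "name": "$job_name",
  "spark": {
    "className": "$main_class",
    "conf": {
      "spark.executor.extraClassPath": "$classpath",
      "spark.driver.extraClassPath": "$classpath"
    },
    "args": [],
    "driverCores": 1,
    "driverMemory": "1g",
    "executorCores": 1,
    "executorMemory": "1g",
    "file": "$jar_name",
    "pyFiles": [],
    "files": ["$site_path"],
    "numExecutors": 4
  }
}
EOF
}

# Create the resource, upload both files, write the definition, import the job.
deploy_cod_spark_job() {
  resource_name=$1; job_name=$2; main_class=$3; hbase_site=$4; app_jar=$5
  jar_name=$(basename "$app_jar")
  site_path=conf/hbase-site.xml

  cde resource create --name "$resource_name" || return 1
  cde resource upload --name "$resource_name" \
    --local-path "$hbase_site" --resource-path "$site_path" || return 1
  cde resource upload --name "$resource_name" \
    --local-path "$app_jar" --resource-path "$jar_name" || return 1

  write_job_definition "$resource_name" "$job_name" "$main_class" \
    "$jar_name" "$site_path" > my-job-definition.json || return 1
  cde job import --file my-job-definition.json
}
```

For the values used in this article, the call would be deploy_cod_spark_job cod-spark-resource my-cod-spark-job "<YOUR MAIN CLASS>" /your/path/to/hbase-site.xml /path/to/your/spark-hbase-project.jar. Deriving the classpath from the resource path keeps the two values from drifting apart if the upload path changes.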

Note the spark.driver.extraClassPath and spark.executor.extraClassPath properties in the job definition: they point to /app/mount/conf, the directory that corresponds to the conf/ resource path used when uploading hbase-site.xml to the CDE resource.

This matters because hbase-site.xml is then loaded automatically from the classpath, so you do not need to refer to it explicitly in your Spark code. Creating the HBase configuration and context is enough:

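import org.apache.hadoop.hbase.HBaseConfiguration

import org.apache.hadoop.hbase.spark.HBaseContext

val conf = HBaseConfiguration.create()

val hbaseContext = new HBaseContext(spark.sparkContext, conf)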

If you prefer CDE UI

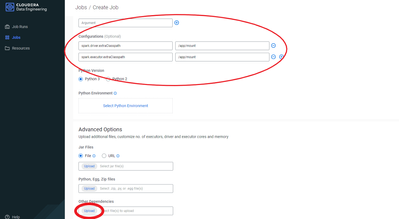

If you prefer using the UI instead, take the following into account (see the screenshot above):

- In your CDE job configuration page, the hbase-site.xml should be uploaded under Advanced options > Other dependencies.

- At the time of this article, it is not possible to specify a path for a file inside a CDE resource or for files uploaded under Other dependencies. For this reason, in your job definition you should use /app/mount as the value for the spark.driver.extraClassPath and spark.executor.extraClassPath properties.

In your CDE job configuration page, set these properties inside the Configurations section.

Created on 07-19-2022 01:25 AM - edited 07-19-2022 01:25 AM

Please note that the last screenshot contains typos, while the article text does not.

The correct configuration properties' names are the following (please note camel case):

spark.driver.extraClassPath

spark.executor.extraClassPath