Community Articles

Deploy a Demo Druid/LLAP Cluster within Minutes Us...

Created on 11-15-2018 08:05 PM - edited 09-16-2022 01:44 AM

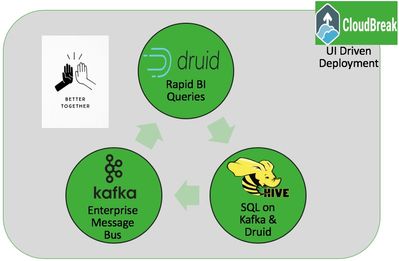

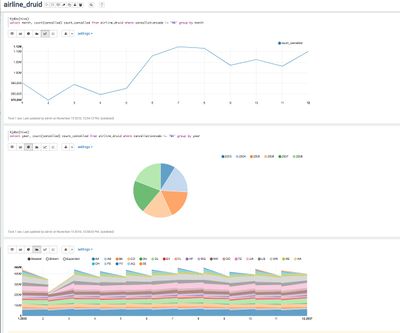

The objective of this article is to demonstrate how to rapidly deploy a demo Druid and LLAP cluster, preloaded with 20 years (nearly 113 million records) of airline data ready for analytics, using CloudBreak on any IaaS. The entire deployment is UI-driven and requires little administrative overhead. All artifacts mentioned in this article are publicly available for you to reuse and try on your own.

Prolegomenon

Time series analysis is a capability heavily leveraged in the IoT space. Current solutions tend to be either non-scalable and expensive, or distributed processing engines that lack low-latency OLAP speeds. Druid is a time series OLAP engine built on a Lambda architecture. Out of the box, Druid's SQL capabilities are severely limited, with no join support. Layering HiveQL over Druid brings the best of both worlds. Hive 3 also offers HiveQL over Kafka, essentially making Hive a true SQL federation engine. With Druid's native Kafka integration, streaming data from Kafka directly into Druid while executing real-time SQL queries via HiveQL offers a comprehensive time series solution for the IoT space.
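To make "layering HiveQL over Druid" concrete, the sketch below assembles a Hive 3 `CREATE TABLE ... STORED BY 'org.apache.hadoop.hive.druid.DruidStorageHandler' ... AS SELECT` statement that indexes an existing Hive table into Druid. It is a minimal illustration, not the statement used by this demo's recipes: the table and column names (`airline_druid`, `airline_raw`, `flight_date`, `carrier`, `origin`, `dep_delay`) and the MONTH/DAY granularities are hypothetical choices. The one fixed convention is that the Druid storage handler expects the timestamp column to be named `__time`.

```python
def druid_ctas(target, source, time_col, dimensions, metrics,
               segment_granularity="MONTH", query_granularity="DAY"):
    """Build a Hive 3 CREATE TABLE ... AS SELECT that stores `target` in Druid."""
    # The Druid storage handler expects the timestamp column to be named __time
    cols = [f"CAST({time_col} AS timestamp with local time zone) AS `__time`"]
    # String columns become Druid dimensions ...
    cols += [f"CAST({d} AS string) AS {d}" for d in dimensions]
    # ... and numeric columns become Druid metrics
    cols += list(metrics)
    select_list = ",\n  ".join(cols)
    return (
        f"CREATE TABLE {target}\n"
        "STORED BY 'org.apache.hadoop.hive.druid.DruidStorageHandler'\n"
        "TBLPROPERTIES (\n"
        f'  "druid.segment.granularity" = "{segment_granularity}",\n'
        f'  "druid.query.granularity" = "{query_granularity}"\n'
        ")\n"
        f"AS SELECT\n  {select_list}\n"
        f"FROM {source}"
    )


# Hypothetical table and column names, for illustration only
ddl = druid_ctas("airline_druid", "airline_raw", "flight_date",
                 dimensions=["carrier", "origin"], metrics=["dep_delay"])
print(ddl)
```

Once a table like this exists, ordinary HiveQL such as `SELECT carrier, AVG(dep_delay) FROM airline_druid GROUP BY carrier` can be answered by Hive. As we understand the integration, Hive pushes eligible parts of the query down to Druid and handles anything Druid cannot do, such as joins with regular Hive tables, on the Hive side.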

On with the demo...

To begin, launch a CloudBreak deployer instance on any IaaS or on-prem VM. The quickstart makes this simple, and launching the CloudBreak deployer is well documented in the CloudBreak documentation.

Once the CloudBreak deployer is up, add your Azure, AWS, GCP, or OpenStack credentials in the CloudBreak UI. This allows the same cluster to be deployed on any of these IaaS providers.

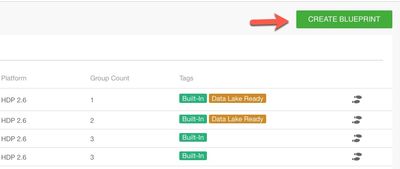

Druid Blueprint

- To launch a Druid/LLAP cluster, an Ambari blueprint is required. Click on Blueprints

- Click on CREATE BLUEPRINT

- Name the blueprint and enter the following URL to import it into CloudBreak

https://s3-us-west-2.amazonaws.com/sunileman1/ambari-blueprints/hdp3/druid+llap+Ambari+Blueprint

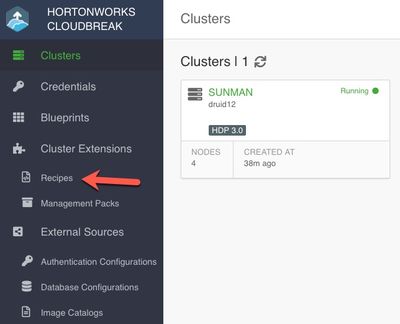
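Later steps attach recipes to specific host groups, so it helps to know which components each host group in the blueprint runs. The sketch below assumes the standard Ambari blueprint JSON layout (`Blueprints`, `host_groups`, and per-group `components`). The `example` blueprint is a hypothetical, heavily trimmed stand-in and does not reproduce the contents of the blueprint at the URL above.

```python
import json


def summarize_blueprint(blueprint):
    """Map each host group name to the sorted component names it runs."""
    return {
        group["name"]: sorted(c["name"] for c in group.get("components", []))
        for group in blueprint.get("host_groups", [])
    }


def groups_running(blueprint, component):
    """List the host groups whose component list includes `component`."""
    return [name for name, comps in summarize_blueprint(blueprint).items()
            if component in comps]


# Hypothetical, heavily trimmed blueprint; a real one has many more
# components and a "configurations" section.
example = json.loads("""
{
  "Blueprints": {"blueprint_name": "druid-llap-example",
                 "stack_name": "HDP", "stack_version": "3.0"},
  "host_groups": [
    {"name": "master", "cardinality": "1",
     "components": [{"name": "NAMENODE"}, {"name": "HIVE_SERVER_INTERACTIVE"},
                    {"name": "DRUID_COORDINATOR"}, {"name": "DRUID_OVERLORD"}]},
    {"name": "druid_broker", "cardinality": "1",
     "components": [{"name": "DRUID_BROKER"}, {"name": "DRUID_ROUTER"}]},
    {"name": "worker", "cardinality": "1",
     "components": [{"name": "DATANODE"}, {"name": "NODEMANAGER"},
                    {"name": "DRUID_HISTORICAL"}, {"name": "DRUID_MIDDLEMANAGER"}]}
  ]
}
""")

for group, comps in summarize_blueprint(example).items():
    print(f"{group}: {', '.join(comps)}")
```

To inspect the real blueprint, download the file from the URL above, parse it with `json.load`, and pass the result to `summarize_blueprint` in place of `example`.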

Recipes

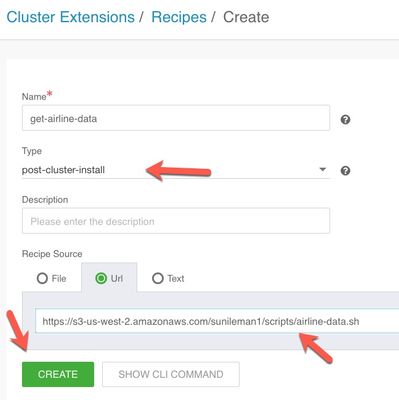

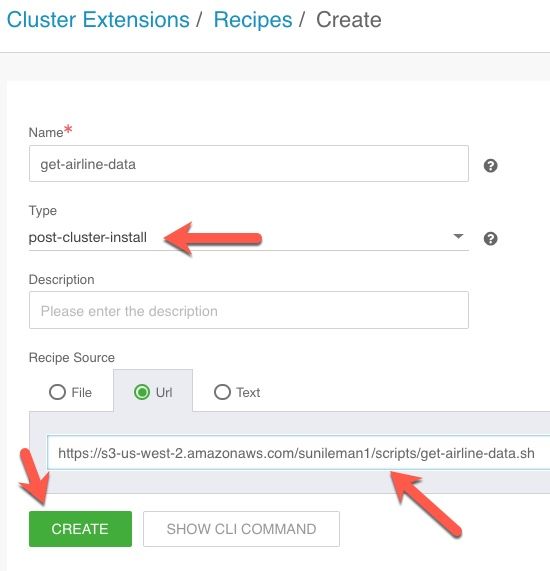

- Druid requires a metadata store (MetaStore). Import the recipe that creates the MetaStore into CloudBreak

- Under Cluster Extensions, click on Recipes

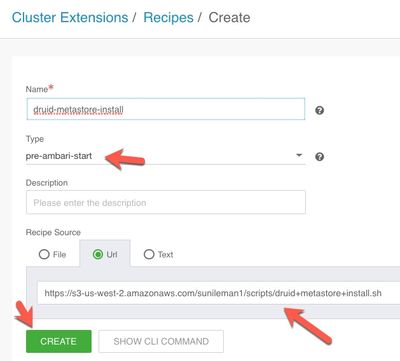

- Enter a name for the recipe and select "pre-ambari-start" to run this recipe prior to Ambari starting

- Under URL enter the following to import the recipe into CloudBreak

https://s3-us-west-2.amazonaws.com/sunileman1/scripts/druid+metastore+install.sh

- Next, import a second recipe that preloads the cluster with 20 years of airline data

- Enter a name for the recipe and select "post-cluster-install" to run this recipe once HDP services are up

- Under URL enter the following to import the recipe into CloudBreak

https://s3-us-west-2.amazonaws.com/sunileman1/scripts/airline-data.sh

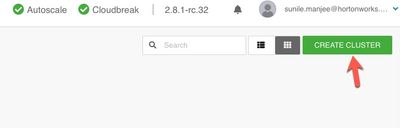

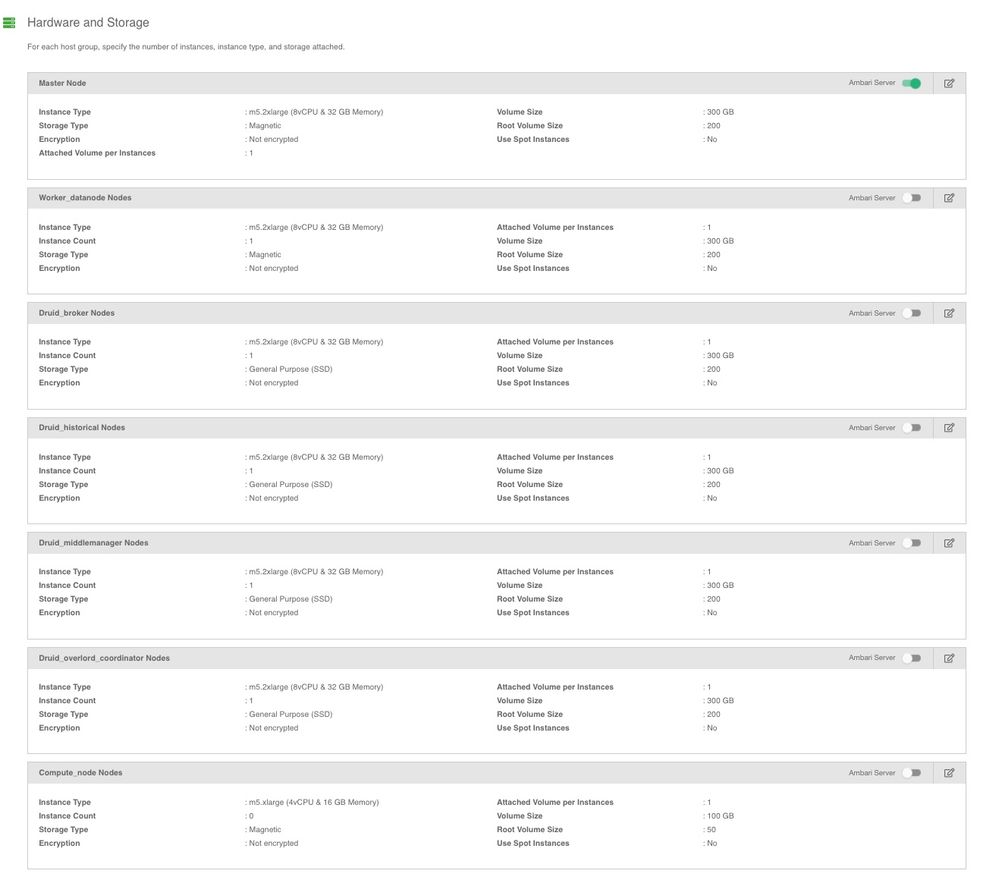
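The phase chosen for each recipe determines when CloudBreak runs it. The sketch below models the four recipe phases offered by CloudBreak 2.x (`pre-ambari-start`, `post-ambari-start`, `post-cluster-install`, `pre-termination`) to show the resulting order. The MetaStore recipe runs first, presumably so that the database exists before Ambari installs and starts Druid. The airline-data recipe waits until the HDP services are up. The recipe names match the ones attached later in this article. The `execution_order` helper is hypothetical and is not a CloudBreak API.

```python
# Recipe phases in the order CloudBreak runs them during a cluster's life.
PHASES = ["pre-ambari-start", "post-ambari-start",
          "post-cluster-install", "pre-termination"]


def execution_order(recipes):
    """Sort (name, phase) pairs by phase; ties keep their input order.

    Raises ValueError for a phase name CloudBreak would not recognize.
    """
    for name, phase in recipes:
        if phase not in PHASES:
            raise ValueError(f"{name}: unknown recipe phase {phase!r}")
    return [name for name, _ in sorted(recipes, key=lambda r: PHASES.index(r[1]))]


recipes = [
    ("get-airline-data", "post-cluster-install"),
    ("druid-metastore-install", "pre-ambari-start"),
]
print(execution_order(recipes))
```

Validating the phase string up front catches errors like a missing hyphen or a typo, which would otherwise surface only when the cluster is provisioned.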

Create a Cluster

- Now that all recipes are in place, the next step is to create a cluster

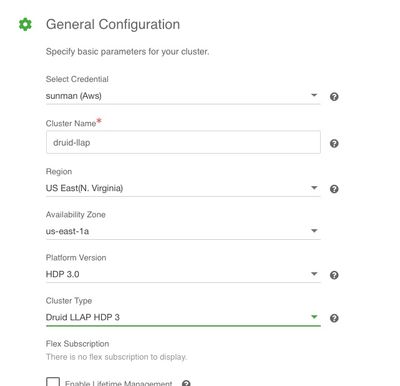

- Select an IaaS to deploy on (credential)

- Enter Cluster Name

- Select HDP 3.0

- Select cluster type: Druid LLAP HPD 3

- This is the new blueprint imported in the previous steps

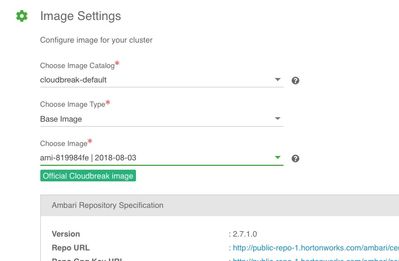

- Select an image. The base image will do for most deployments

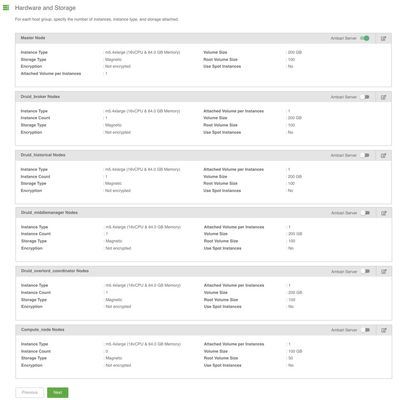

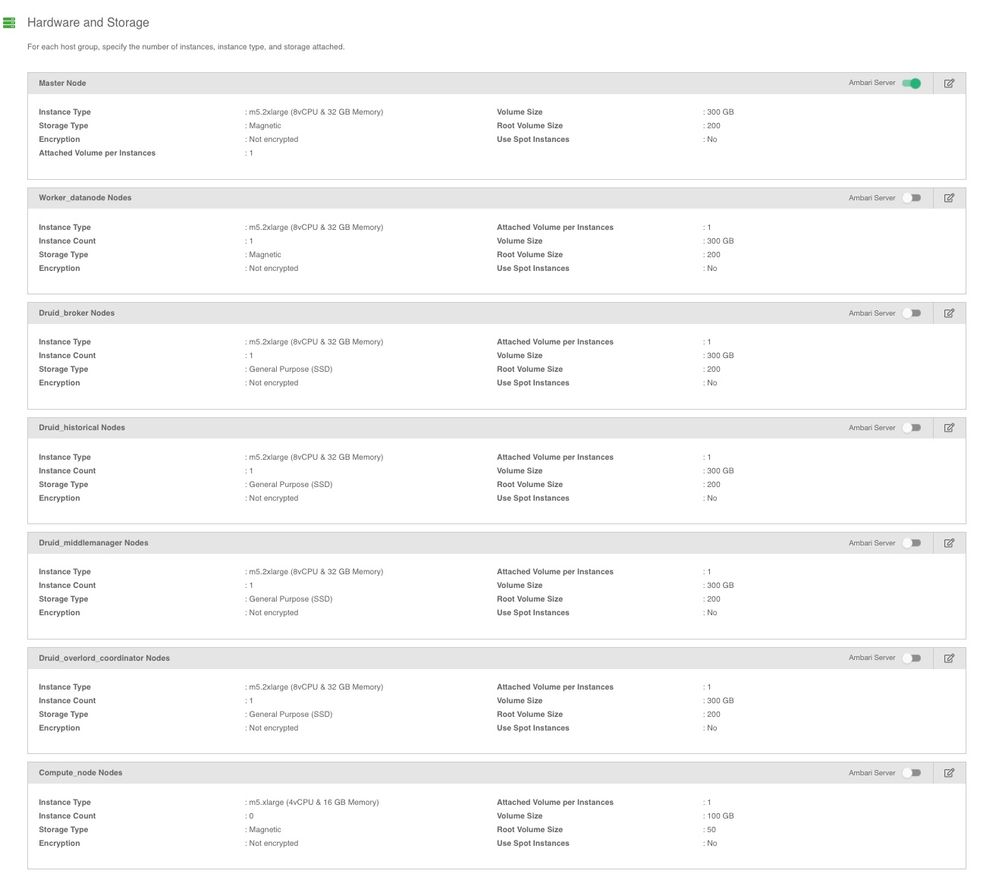

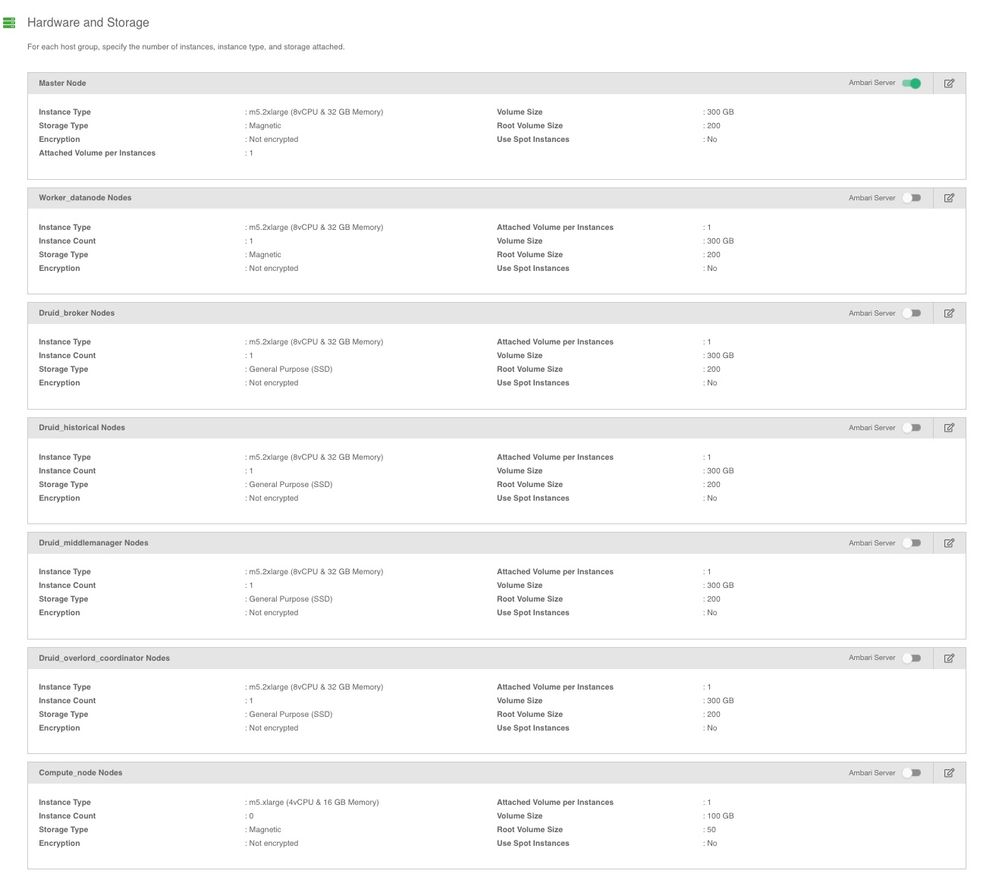

- Select instance types

- Note: I used 64 GB of RAM per node and added 3 compute nodes.

- Select the network to deploy the cluster on. If one is not pre-created, CloudBreak will create one

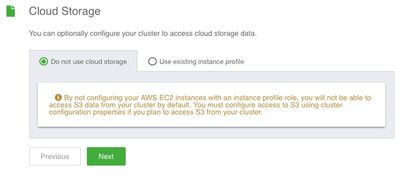

- CloudBreak can be configured to use S3, ADLS, WASB, or GCS as cloud storage

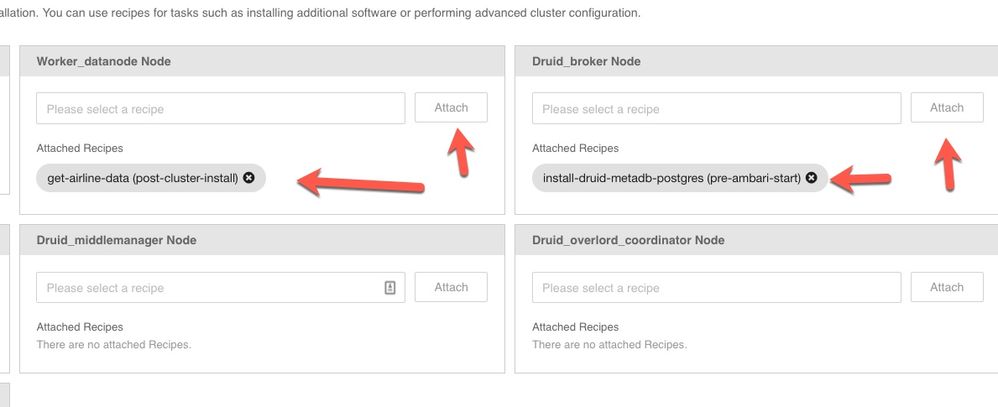
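Hadoop reaches each of these object stores through its own connector and URI scheme. The mapping below uses the standard Hadoop connectors: S3A for S3, ADL for ADLS Gen1 (ADLS Gen2 uses `abfs://` instead), WASB for Azure Blob Storage, and the GCS connector. The bucket, account, container, and path values are hypothetical. The base path a given cluster actually uses is whatever is configured in the CloudBreak UI, not something this code decides.

```python
def storage_uri(kind, path, bucket=None, account=None, container=None):
    """Build a Hadoop filesystem URI for a cloud object store (sketch)."""
    path = path.lstrip("/")
    if kind == "s3":
        # S3A is the maintained Hadoop connector for Amazon S3
        return f"s3a://{bucket}/{path}"
    if kind == "gcs":
        return f"gs://{bucket}/{path}"
    if kind == "adls":
        # ADLS Gen1 accounts resolve under azuredatalakestore.net
        return f"adl://{account}.azuredatalakestore.net/{path}"
    if kind == "wasb":
        # wasbs:// is the TLS variant of the WASB scheme
        return f"wasbs://{container}@{account}.blob.core.windows.net/{path}"
    raise ValueError(f"unsupported storage kind: {kind}")


# Hypothetical bucket name
print(storage_uri("s3", "/apps/druid/segments", bucket="my-demo-bucket"))
```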

- On the worker host group, attach the get-airline-data recipe

- On the Druid Broker host group, attach the druid-metastore-install recipe

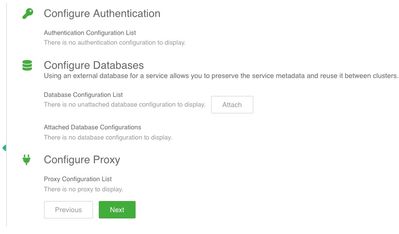
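A recipe runs only on the host groups it is attached to. A quick consistency check between the intended attachments and the blueprint's host groups can therefore catch a recipe placed on the wrong group before the cluster is launched. This is a hypothetical helper, not a CloudBreak feature. The host group names (`worker`, `druid_broker`, and so on) are assumptions; use whatever names the imported blueprint defines.

```python
def check_attachments(host_groups, attachments, required):
    """Return a list of problems with recipe-to-host-group attachments.

    host_groups: names defined in the blueprint
    attachments: host group name -> list of attached recipe names
    required:    recipe name -> host group it must be attached to
    """
    problems = []
    for group in attachments:
        if group not in host_groups:
            problems.append(f"unknown host group: {group}")
    for recipe, group in required.items():
        if recipe not in attachments.get(group, []):
            problems.append(f"{recipe} is not attached to {group}")
    return problems


# Hypothetical host group names
host_groups = {"master", "druid_broker", "worker", "compute"}
attachments = {
    "worker": ["get-airline-data"],
    "druid_broker": ["druid-metastore-install"],
}
required = {
    "get-airline-data": "worker",
    "druid-metastore-install": "druid_broker",
}
print(check_attachments(host_groups, attachments, required))  # []
```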

- External MetaStores (databases) can be bootstrapped to the cluster. This demo does not require it.

- Knox will not be used for this demo

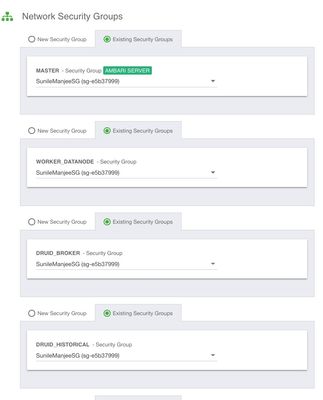

- Attach a security group to each host group. If one is not pre-created, CloudBreak will create one

- Lastly, provide an Ambari password and SSH key

- Cluster deployment and provisioning will begin. Within a few minutes the cluster will be ready and an Ambari URL will be available.

- The Zeppelin notebook can be imported using the URL below.

https://s3-us-west-2.amazonaws.com/sunileman1/Zeppelin-NoteBooks/airline_druid.json
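The two post-provisioning steps can also be scripted: confirming service state through Ambari's REST API (`GET /api/v1/clusters/<name>/services?fields=ServiceInfo/state`) and importing the notebook through Zeppelin's REST API (`POST /api/notebook/import`). This is a minimal sketch that makes several assumptions. The Ambari and Zeppelin hosts are hypothetical; CloudBreak often fronts Ambari through its gateway, so copy the real Ambari URL from the CloudBreak UI. Port 9995 is the usual HDP default for Zeppelin, but confirm it in Ambari. The cluster name and credentials are placeholders. The sketch also does not handle Zeppelin authentication (Shiro) if it is enabled.

```python
import base64
import json
import urllib.request

NOTEBOOK_URL = ("https://s3-us-west-2.amazonaws.com/sunileman1/"
                "Zeppelin-NoteBooks/airline_druid.json")


def ambari_services_request(ambari_base, cluster, user, password):
    """Build a GET that lists every service in the cluster with its state."""
    url = (f"{ambari_base.rstrip('/')}/api/v1/clusters/{cluster}"
           "/services?fields=ServiceInfo/state")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, method="GET", headers={
        "Authorization": f"Basic {token}",
        # Ambari requires this header on modifying calls; harmless on GET
        "X-Requested-By": "ambari",
    })


def service_states(response_body):
    """Map service name -> state from an Ambari /services response."""
    items = json.loads(response_body).get("items", [])
    return {i["ServiceInfo"]["service_name"]: i["ServiceInfo"]["state"]
            for i in items}


def zeppelin_import_request(zeppelin_base, note_json):
    """Build the POST that imports a note into Zeppelin."""
    # Parse first so a malformed notebook file fails here, not on the server
    body = json.dumps(json.loads(note_json)).encode("utf-8")
    return urllib.request.Request(
        f"{zeppelin_base.rstrip('/')}/api/notebook/import",
        data=body, method="POST",
        headers={"Content-Type": "application/json"})


def fetch(req_or_url):
    """Send a request (needs network access) and return the raw response bytes."""
    with urllib.request.urlopen(req_or_url) as resp:
        return resp.read()


# Usage (hypothetical hosts, cluster name and password):
# states = service_states(fetch(ambari_services_request(
#     "https://ambari-host.example.com/ambari", "druid-demo", "admin", "secret")))
# note = fetch(NOTEBOOK_URL).decode("utf-8")
# print(fetch(zeppelin_import_request("http://zeppelin-host.example.com:9995", note)))
```

Building each request separately from sending it keeps the URL and header construction easy to check without a live cluster.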

Here I demonstrated how to rapidly launch a Druid/LLAP cluster preloaded with airline data using CloudBreak. Enjoy Druid; it's crazy fast. HiveQL makes Druid easy to work with, and CloudBreak makes the deployment super quick.

Created on 11-15-2019 03:12 PM

@sunile_manjee Hey, thanks for the detailed documentation. This cloudbreak-default image has an old version of the Druid Controller Console. I was wondering if there is a newer image with the latest version of Druid installed.

Thanks

Kalyan

Created on 11-18-2019 08:29 PM

@Kalyan77 Good question; I haven't tried it yet. In the next few weeks I have an engagement that will require me to find out. I will keep you posted.