Cloudera Community: Community Articles

NiFi for Clickstream Log Ingestion into HBase + Ph...

Created on 11-18-2016 09:57 AM - edited 08-17-2019 08:00 AM

Synopsis

- You are a marketing analyst tasked with building a BI dashboard that reports website activity to management in real time throughout Black Friday, the day after Thanksgiving.

- Unfortunately, all of the data engineers are busy with other projects and cannot immediately help you.

- Not to worry. With Hortonworks DataFlow (HDF), powered by Apache NiFi, you can create a data flow in minutes to process the data the dashboard needs.

Source Data:

- Fictitious online retailer Initech Corporation

- JSON-formatted clickstream data

- Your company stores the raw clickstream log files in HDFS. The JSON-formatted log files are written to HDFS directories partitioned by day and hour. For example:

  - /data/clickstream/2016-11-20/hour=00

  - /data/clickstream/2016-11-20/hour=01

  - /data/clickstream/2016-11-20/hour=...

  - /data/clickstream/2016-11-20/hour=23

- There may also be subdirectories under each hour directory.

- This directory structure, or a similar variant, is common in organizations that store clickstream logs.
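
The partitioning scheme can be sketched in Python as below. The helper names `hourly_partitions` and `partition_of` are hypothetical, and the regular expression assumes the `YYYY-MM-DD/hour=HH` layout shown in the examples; it also tolerates extra subdirectories beneath the hour directory.

```python
from __future__ import annotations

import re
from datetime import date

# Layout described above: <root>/<YYYY-MM-DD>/hour=<HH>[/optional subdirectories]/<file>
_PARTITION = re.compile(r"/(\d{4}-\d{2}-\d{2})/hour=(\d{2})(?:/|$)")


def hourly_partitions(root: str, day: date) -> list[str]:
    """Return the 24 hourly partition directories for one day."""
    return [f"{root}/{day.isoformat()}/hour={h:02d}" for h in range(24)]


def partition_of(path: str) -> tuple[str, int] | None:
    """Extract (day, hour) from a file path, even if the file sits in a subdirectory of the hour."""
    m = _PARTITION.search(path)
    return (m.group(1), int(m.group(2))) if m else None


print(hourly_partitions("/data/clickstream", date(2016, 11, 20))[0])
# /data/clickstream/2016-11-20/hour=00
print(partition_of("/data/clickstream/2016-11-20/hour=07/batch-2/part-0001.json"))
# ('2016-11-20', 7)
```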

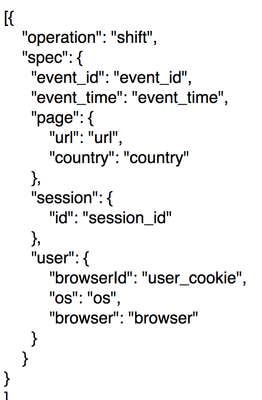

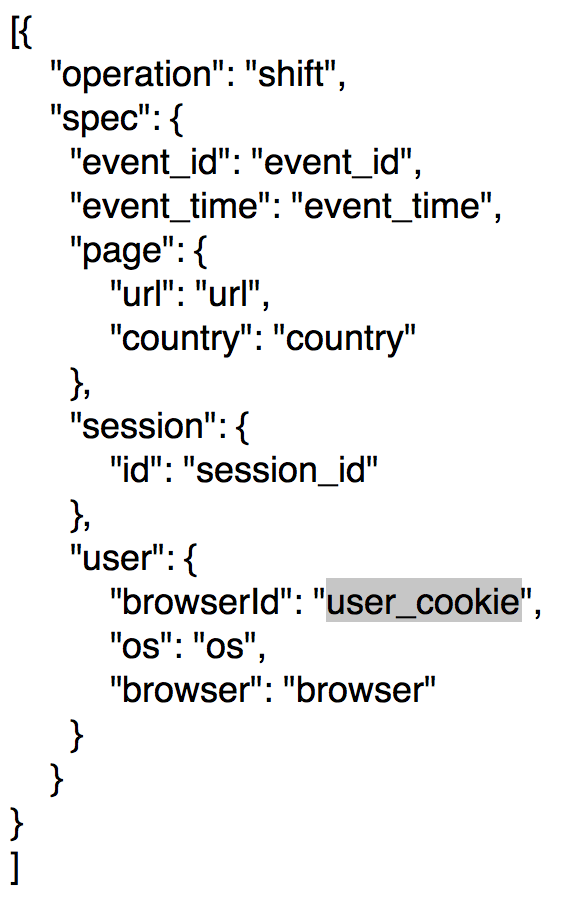

- The JSON data contains nested hierarchies. Sample JSON record:

Solution considerations:

- New files are written throughout the day for each hour, and late-arriving data is added to existing hours.

- Since files arrive constantly, the data flow needs to be always on and running.

- HBase is a fast, scalable option for the backend datastore:

  - The data is semi-structured JSON

  - HBase does not require a fixed schema (only column families are declared up front)

  - HBase was designed for high-throughput writes, whereas Hive is better suited to batch loads

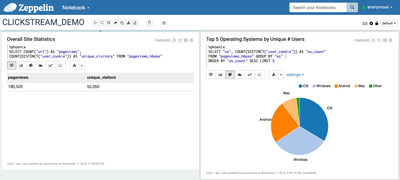

- Use Apache Phoenix to create a view atop the HBase table:

  - Phoenix provides the schema for reporting

  - Phoenix lets you write ANSI SQL queries against the HBase data

  - The Phoenix JDBC driver lets any JDBC-capable BI tool connect

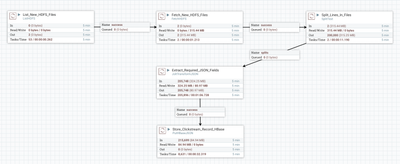

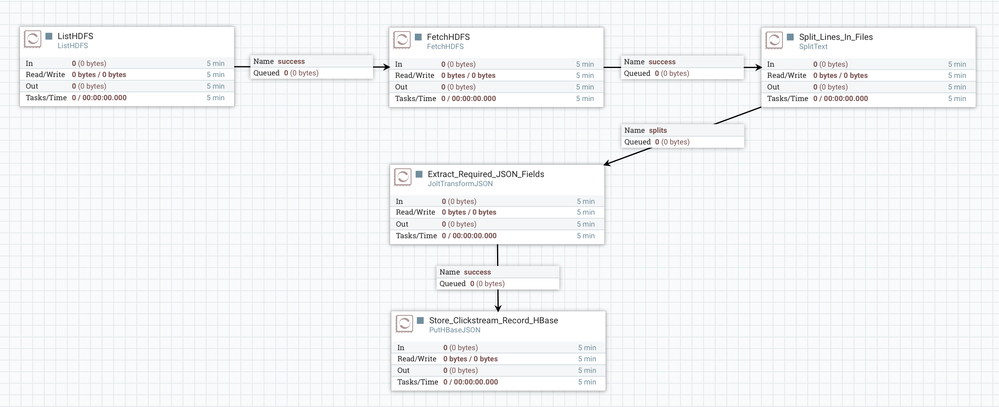

HDF DataFlow Overview:

* You may need to increase the heap size used by NiFi. You will know it is needed if you start seeing the error java.lang.OutOfMemoryError.
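
In NiFi the JVM heap is configured in `conf/bootstrap.conf`, on the `java.arg` lines that carry `-Xms` (initial heap) and `-Xmx` (maximum heap); by default these are typically 512m, but check your own file. The hypothetical helper `set_nifi_heap` below rewrites those two values in the file's text. The sizes in the usage line are placeholders, not a recommendation for this workload.

```python
import re

# Matches bootstrap.conf lines such as "java.arg.2=-Xms512m" or "java.arg.3=-Xmx512m".
_HEAP_ARG = re.compile(r"^(java\.arg\.\d+=-)(Xms|Xmx)\S+$")


def set_nifi_heap(bootstrap_conf: str, xms: str, xmx: str) -> str:
    """Return bootstrap.conf text with the initial (-Xms) and maximum (-Xmx) heap replaced."""
    lines = []
    for line in bootstrap_conf.splitlines():
        m = _HEAP_ARG.match(line.strip())
        if m:
            size = xms if m.group(2) == "Xms" else xmx
            line = f"{m.group(1)}{m.group(2)}{size}"  # keep the java.arg.N index unchanged
        lines.append(line)
    return "\n".join(lines) + "\n"


sample = "# JVM memory settings\njava.arg.2=-Xms512m\njava.arg.3=-Xmx512m\n"
print(set_nifi_heap(sample, "4g", "4g"))  # placeholder sizes
```

NiFi reads bootstrap.conf at startup, so restart NiFi for a new heap size to take effect.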

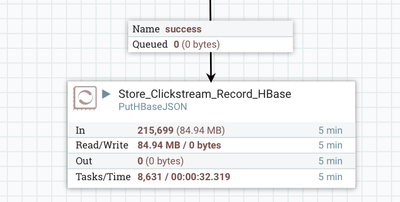

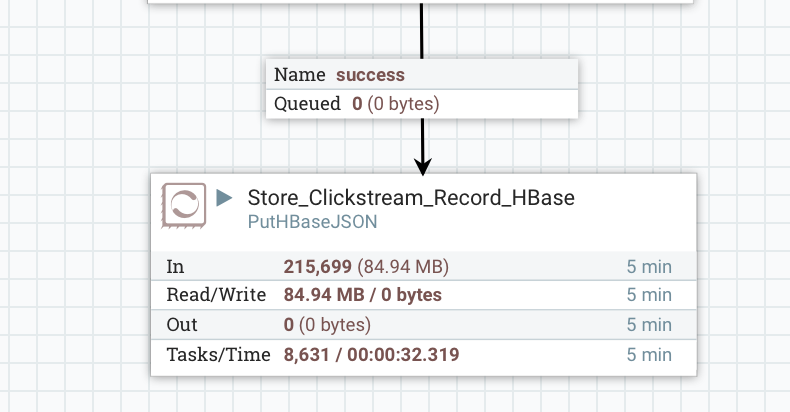

Throughput:

- HDF loaded 215,699 records into HBase in under 33 seconds

- I'm running HDF on a single node with 64 GB of RAM and 16 cores

- Other Hadoop and Hadoop-related services also run on this node, including NodeManager, DataNode, Livy Server, RegionServer, Phoenix Query Server, NFS Gateway, and Metrics Monitor
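
As a rough rate, loading 215,699 records in under 33 seconds works out to more than about 6,500 records per second:

$$
\text{throughput} > \frac{215{,}699\ \text{records}}{33\ \text{s}} \approx 6{,}536\ \text{records/s}
$$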

Step 1:

Create the HBase table. You can easily do this via Apache Zeppelin.

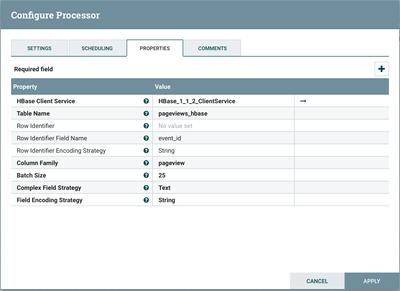

- Create one column family, 'pageview'

- Multiple versions are not needed because this data is never updated: each website hit is unique.
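
The statement for this step can be generated as below. The table name `clickstream` is an assumption (the actual name appears only in the screenshot) and the helper `hbase_create_table` is hypothetical; its output is HBase shell syntax that could be pasted into Zeppelin's HBase interpreter or `hbase shell`.

```python
def hbase_create_table(table: str, family: str, versions: int = 1) -> str:
    """Build an HBase shell 'create' command for a table with a single column family."""
    # VERSIONS => 1 keeps one cell version, which is all we need when rows are never updated.
    return f"create '{table}', {{NAME => '{family}', VERSIONS => {versions}}}"


print(hbase_create_table("clickstream", "pageview"))
# create 'clickstream', {NAME => 'pageview', VERSIONS => 1}
```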

Step 2:

Create the Phoenix view. You can easily do this via Apache Zeppelin.
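
A Phoenix view maps an existing HBase table onto a SQL schema without copying the data. The hypothetical helper below prints such a statement. The table name, the row-key column name `event_id`, and the column qualifiers are assumptions based on the fields listed in Step 6, and every column is declared VARCHAR on the assumption that values are stored as strings.

```python
from __future__ import annotations


def phoenix_view_ddl(table: str, family: str, pk: str, columns: list[str]) -> str:
    """Build a Phoenix CREATE VIEW statement over an existing HBase table."""
    # Double quotes keep identifiers case-sensitive; unquoted names are upper-cased by
    # Phoenix and would not match the lower-case HBase table, family, and qualifiers.
    col_defs = [f'"{pk}" VARCHAR PRIMARY KEY']  # the primary key maps to the HBase row key
    col_defs += [f'"{family}"."{c}" VARCHAR' for c in columns]
    body = ",\n  ".join(col_defs)
    return f'CREATE VIEW "{table}" (\n  {body}\n)'


print(phoenix_view_ddl(
    "clickstream", "pageview", "event_id",
    ["event_time", "url", "country", "session_id", "user_cookie", "os", "browser"],
))
```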

Step 3:

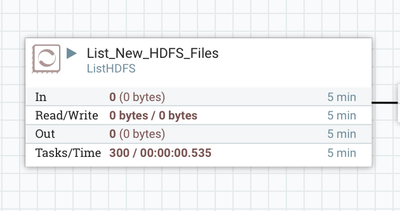

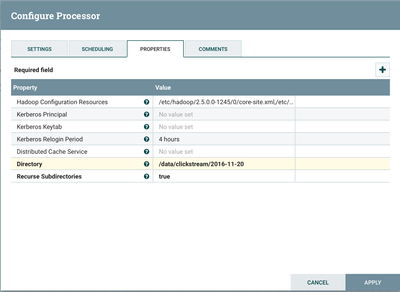

Configure the ListHDFS processor

- ListHDFS lists only files that are new since its previous listing; it keeps state about what it has already listed

- Because the data flow runs continuously, we must not process the same data twice, and ListHDFS's state tracking prevents that

- Our top-level directory holds the clickstream data for 2016-11-20

- ListHDFS will list all new files in the subdirectories beneath the top-level directory for 2016-11-20

- Notice that the value for 'Directory' is the top-level directory '/data/clickstream/2016-11-20'

- The value for 'Recursive Subdirectories' is set to 'true' so that all new files will be picked up as they are added

- 'Hadoop Configuration Resources' is set to a comma-separated list of the full paths to core-site.xml and hdfs-site.xml
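
ListHDFS avoids reprocessing by storing state about the modification timestamps of the files it has already listed. The class below is a simplified Python model of that idea (a hypothetical `ListingState`, not NiFi's code): it remembers the newest timestamp seen plus the files listed at that timestamp, so a rerun over the same directory tree returns only files that appeared since.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ListingState:
    """Simplified model of incremental listing; NOT NiFi's actual ListHDFS algorithm."""

    latest_ts: int = -1  # newest modification time listed so far
    listed_at_latest: set[str] = field(default_factory=set)  # paths already listed with that time

    def list_new(self, snapshot: dict[str, int]) -> list[str]:
        """Given {path: modification_time} for everything under the directory, return unseen paths."""
        new = sorted(
            path
            for path, ts in snapshot.items()
            if ts > self.latest_ts
            or (ts == self.latest_ts and path not in self.listed_at_latest)
        )
        if new:
            newest = max(snapshot[p] for p in new)
            if newest > self.latest_ts:
                self.latest_ts = newest
                self.listed_at_latest = set()
            self.listed_at_latest |= {p for p in new if snapshot[p] == newest}
        return new


state = ListingState()
print(state.list_new({"/data/clickstream/2016-11-20/hour=00/a.json": 100}))
# ['/data/clickstream/2016-11-20/hour=00/a.json']
print(state.list_new({"/data/clickstream/2016-11-20/hour=00/a.json": 100,
                      "/data/clickstream/2016-11-20/hour=01/b.json": 160}))
# ['/data/clickstream/2016-11-20/hour=01/b.json']
```

Like any timestamp-based listing, this model would skip a file whose modification time is older than one it has already listed.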

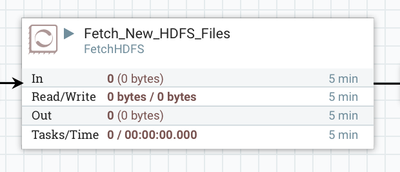

Step 4:

Configure the FetchHDFS processor

- ListHDFS only lists the files

- FetchHDFS retrieves the contents of each listed file

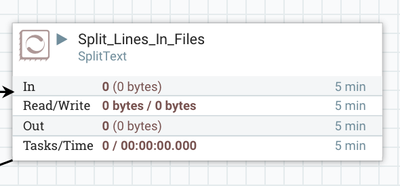
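
ListHDFS emits one FlowFile per file carrying attributes such as `path` and `filename`, and FetchHDFS's 'HDFS Filename' property defaults to `${path}/${filename}`, so each listed entry resolves to a full path to read. The sketch below models that hand-off in Python; `fetch_listed` is hypothetical and an in-memory dict stands in for HDFS.

```python
from __future__ import annotations

import posixpath
from typing import Callable


def fetch_listed(listing: list[dict[str, str]], read_file: Callable[[str], bytes]) -> list[bytes]:
    """Read the contents of each file described by a listing entry."""
    contents = []
    for attrs in listing:
        # Same idea as the ${path}/${filename} expression: directory plus file name.
        full_path = posixpath.join(attrs["path"], attrs["filename"])
        contents.append(read_file(full_path))
    return contents


# In-memory stand-in for HDFS (hypothetical file name and content).
fake_hdfs = {"/data/clickstream/2016-11-20/hour=00/part-0001.json": b'{"eventId": "e1"}\n'}
listing = [{"path": "/data/clickstream/2016-11-20/hour=00", "filename": "part-0001.json"}]
print(fetch_listed(listing, fake_hdfs.__getitem__))
```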

Step 5:

Use the SplitText processor to split the contents of each HDFS file into individual JSON records, one per line

I used the processor as is without any changes, other than renaming it to 'Split_Lines_In_File'

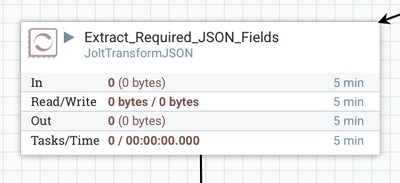

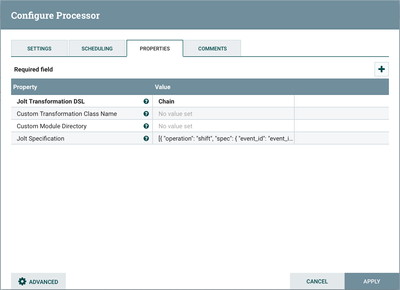
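
Assuming each log file stores one JSON record per line, splitting line by line yields one record per split. The hypothetical function below shows that in Python; unlike SplitText, which emits raw text FlowFiles, it also parses each line, which confirms the line is valid JSON.

```python
from __future__ import annotations

import json


def split_records(file_text: str) -> list[dict]:
    """Split one newline-delimited JSON file into one parsed record per non-blank line."""
    return [json.loads(line) for line in file_text.splitlines() if line.strip()]


print(split_records('{"eventId": "e1"}\n\n{"eventId": "e2"}\n'))
# [{'eventId': 'e1'}, {'eventId': 'e2'}]
```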

Step 6:

Configure the JoltTransformJSON processor to extract only those fields needed for the dashboard.

We only need the following fields from the JSON data:

- event ID: unique identifier for each website hit

- event time: time when the website hit occurred

- url: URL of the page viewed

- country: country where the page was served

- session id: session ID for the user

- user cookie

- os: the user's device OS

- browser: the browser the user used

Some of the above fields are child elements of a top-level parent. The 'Jolt Specification' property needs to be set so that those fields are extracted properly:
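
Jolt's `shift` operation mirrors the input structure in its spec and, at each leaf, names the output field that receives the value; input fields the spec does not mention are dropped. The Python sketch below implements only the literal-key subset of that behavior to show what such a specification does. The nested input field names (`page.url`, `device.os`, and so on) and the flat output names are hypothetical, since they depend on the actual log format.

```python
from __future__ import annotations

import json

# Hypothetical input layout; adjust the keys to match the real clickstream records.
SPEC = {
    "eventId": "event_id",
    "eventTime": "event_time",
    "page": {"url": "url"},
    "geo": {"country": "country"},
    "session": {"id": "session_id"},
    "user": {"cookie": "user_cookie"},
    "device": {"os": "os", "browser": "browser"},
}


def shift(spec: dict, record: dict) -> dict:
    """Apply a literal-key subset of Jolt's "shift" operation (no wildcards, no dotted targets)."""
    out: dict = {}
    for key, target in spec.items():
        if key not in record:
            continue  # fields absent from the input are simply not written
        if isinstance(target, dict):
            if isinstance(record[key], dict):
                out.update(shift(target, record[key]))  # descend into the nested object
        else:
            out[target] = record[key]  # leaf: copy the value to a flat output key
    return out


# Equivalent spec as JoltTransformJSON would take it with its DSL set to "Chain".
print(json.dumps([{"operation": "shift", "spec": SPEC}], indent=2))
```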

Step 7:

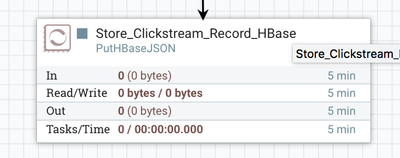

Configure the PutHBaseJSON processor to store the record.

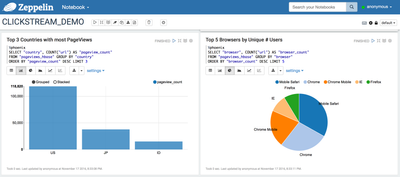
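
PutHBaseJSON expects a flat JSON document: the field named by its 'Row Identifier Field Name' property supplies the row key, and the remaining fields become columns in the configured 'Column Family'. The hypothetical function below models that mapping for one record, assuming the event ID field (called `event_id` here) is the row identifier and `pageview` is the column family from Step 1. It omits the processor's other options and is not its actual implementation.

```python
from __future__ import annotations


def to_hbase_cells(record: dict, row_id_field: str, family: str) -> tuple[str, list[tuple[str, str, str]]]:
    """Map a flat JSON record to a row key plus (family, qualifier, value) cells."""
    row_key = str(record[row_id_field])
    cells = [
        (family, name, str(value))
        for name, value in sorted(record.items())
        # Assumption: the row-id field becomes the row key and is not stored again as a
        # column; null values are skipped.
        if name != row_id_field and value is not None
    ]
    return row_key, cells


print(to_hbase_cells({"event_id": "e1", "url": "/home", "os": "iOS"}, "event_id", "pageview"))
# ('e1', [('pageview', 'os', 'iOS'), ('pageview', 'url', '/home')])
```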

Apache Zeppelin Notebook:

Created on 11-18-2016 04:07 PM

awesome @Binu Mathew

Created on 12-31-2016 03:25 AM

@Binu Mathew: Thanks for sharing the awesome article. Would you mind sharing the sample data?