Community Articles

Securing Spark with Ranger using Zeppelin and Liv...


Created on 11-15-2016 04:49 PM - edited 08-17-2019 08:10 AM

Prerequisites

This is a continuation of "How to setup a multi user (Active Directory backed) zeppelin integrated with ldap and using Livy Re...".

You should have a running HDP cluster with Zeppelin (configured with Livy) and Ranger.

Tested on HDP 2.5.

I will use three user accounts to demonstrate security: hadoopadmin, sales1 and hr1. Substitute accounts from your own environment as needed. In my setup, these users are synced from LDAP/AD.

Spark and HDFS security

We will configure Ranger policies to:

- Protect the /sales HDFS directory, so that only the sales group has access to it

- Protect the sales Hive table, so that only the sales group has access to it

Create the /sales directory in HDFS as hadoopadmin and remove all of its POSIX permissions, so that only a Ranger policy can grant access to it:

```bash
sudo -u hadoopadmin hadoop fs -mkdir /sales
sudo -u hadoopadmin hadoop fs -chmod 000 /sales
```

Now log in as sales1 and try to access the directory before adding any Ranger HDFS policy:

```bash
sudo su - sales1
hdfs dfs -ls /sales
```

It fails with an authorization error:

```
Permission denied: user=sales1, access=READ_EXECUTE, inode="/sales":hadoopadmin:hdfs:d---------
```
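The error message already explains the denial: after `inode=` come the path, owner, group and mode, and `d---------` is a directory with no permissions for anyone. As a hypothetical helper (not part of Hadoop), the function below splits such a message into its fields. It assumes the exact format shown above and works on both the sales1 error here and the longer `AccessControlException` line you may see from Spark.

```bash
# Hypothetical helper: split an HDFS "Permission denied" message into fields.
# Assumes the format: user=U, access=A, inode="PATH":OWNER:GROUP:MODE
parse_denial() {
  local msg="$1"
  local re='user=([^,]+), access=([A-Z_]+), inode="([^"]+)":([^:]+):([^:]+):([-a-zA-Z]+)'
  if [[ $msg =~ $re ]]; then
    printf 'user=%s\naccess=%s\npath=%s\nowner=%s\ngroup=%s\nmode=%s\n' \
      "${BASH_REMATCH[1]}" "${BASH_REMATCH[2]}" "${BASH_REMATCH[3]}" \
      "${BASH_REMATCH[4]}" "${BASH_REMATCH[5]}" "${BASH_REMATCH[6]}"
  else
    echo "unrecognized message" >&2
    return 1
  fi
}

# usage: parse_denial 'Permission denied: user=sales1, access=READ_EXECUTE, inode="/sales":hadoopadmin:hdfs:d---------'
```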

Let's create a policy in Ranger that allows the user to access the /sales directory.

Log in to the Ranger UI (e.g. at http://RANGER_HOST_PUBLIC_IP:6080/index.html) as admin/admin.

In Ranger, click 'Audit' to open the Audits page and filter by:

- Service Type: HDFS
- User: sales1

Notice that Ranger captured the access attempt and, since there is currently no policy allowing access, it was "Denied".

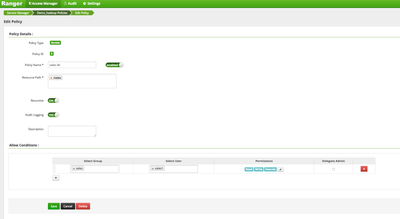

Create an HDFS policy in Ranger as follows:

On the 'Access Manager' tab, click HDFS > (clustername)_hadoop.

Click the 'Add New Policy' button to create a policy that gives sales group users access to the /sales directory:

- Policy Name: sales dir
- Resource Path: /sales
- Group: sales
- Permissions: Execute, Read, Write

Then click 'Add'.
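For repeatable setups, the same policy can probably also be created through Ranger's public REST API instead of the UI. This is a sketch under assumptions: it assumes your Ranger version exposes the v2 endpoint `/service/public/v2/api/policy`, that the HDFS access types are named `read`, `write` and `execute`, and that the placeholder service name `mycluster_hadoop` is replaced with your `(clustername)_hadoop` service. The admin/admin credentials mirror the UI login used above.

```bash
# Sketch: build the "sales dir" policy JSON (group sales gets read/write/execute
# on /sales, recursively). The service name argument is a placeholder.
build_sales_policy() {
  local service="$1"
  cat <<EOF
{
  "service": "${service}",
  "name": "sales dir",
  "resources": {
    "path": { "values": ["/sales"], "isRecursive": true }
  },
  "policyItems": [
    {
      "groups": ["sales"],
      "accesses": [
        { "type": "read", "isAllowed": true },
        { "type": "write", "isAllowed": true },
        { "type": "execute", "isAllowed": true }
      ]
    }
  ]
}
EOF
}

# POST the policy to Ranger Admin (assumed v2 public API); -f makes curl fail on HTTP errors.
create_sales_policy() {
  local ranger_url="$1" service="$2"
  build_sales_policy "$service" |
    curl -sS -f -u admin:admin -H 'Content-Type: application/json' \
      -X POST --data @- "${ranger_url}/service/public/v2/api/policy"
}

# usage:
#   create_sales_policy "http://RANGER_HOST_PUBLIC_IP:6080" "mycluster_hadoop"
```

Keeping the JSON in its own function makes it easy to inspect (or pipe through a JSON validator) before sending it.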

Wait about 30 seconds for the policy to take effect.
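Instead of a fixed wait, you can poll until the listing succeeds. The 30-second figure corresponds to the default interval at which the HDFS Ranger plugin pulls policy updates (`ranger.plugin.hdfs.policy.pollIntervalMs`, 30000 ms by default). The helper below is a hypothetical convenience, not a Hadoop or Ranger tool: it re-runs any command every INTERVAL seconds for up to TIMEOUT seconds.

```bash
# Hypothetical helper: re-run a command until it succeeds or TIMEOUT seconds pass.
# Usage: wait_for_access TIMEOUT INTERVAL command [args...]
wait_for_access() {
  local timeout="$1" interval="$2"
  shift 2
  local waited=0
  # Retry while the command fails (e.g. the plugin has not pulled the policy yet).
  until "$@" >/dev/null 2>&1; do
    if (( waited >= timeout )); then
      echo "still failing after ${waited}s" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  echo "succeeded after ~${waited}s"
}

# usage (as sales1): wait_for_access 120 5 hdfs dfs -ls /sales
```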

Now try accessing the directory again as sales1. This time there is no error:

```bash
hdfs dfs -ls /sales
```

In Ranger, click 'Audit' to open the Audits page and filter by:

- Service Type: HDFS
- User: sales1

Notice that Ranger captured the access attempt and, since a policy now allows it, it was "Allowed".
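The policy grants access by group, so it only helps users whose groups, as resolved by the NameNode, include `sales`. `hdfs groups <user>` prints those groups; the hypothetical helper below wraps it, assuming the usual `user : group1 group2` output format. This is also a quick way to see why a user outside the sales group will still be denied.

```bash
# Hypothetical helper: succeed if HDFS resolves USER as a member of GROUP.
# Parses `hdfs groups USER` output of the form "USER : group1 group2 ...".
user_in_group() {
  local user="$1" group="$2"
  hdfs groups "$user" 2>/dev/null |
    awk -v u="$user" -v g="$group" -F' : ' '
      $1 == u { n = split($2, gs, " "); for (i = 1; i <= n; i++) if (gs[i] == g) found = 1 }
      END { exit !found }'
}

# usage: user_in_group sales1 sales && echo "sales1 is in sales"
```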

Now let's copy test data for the sales user. Run the following on the command line as the 'sales1' user:

```bash
wget https://raw.githubusercontent.com/roberthryniewicz/datasets/master/airline-dataset/flights/flights.c... -O /tmp/flights.csv
# remove existing copies of dataset from HDFS
hadoop fs -rm -r -f /sales/airflightsdelays
# create directory on HDFS
hadoop fs -mkdir /sales/airflightsdelays
# put data into HDFS
hadoop fs -put /tmp/flights.csv /sales/airflightsdelays/
# print the header and the first rows
hadoop fs -cat /sales/airflightsdelays/flights.csv | head
```

You should see output similar to the following:

```
[sales1@az1secure0 ~]$ wget https://raw.githubusercontent.com/roberthryniewicz/datasets/master/airline-dataset/flights/flights.c... -O /tmp/flights.csv
--2016-11-15 16:28:24-- https://raw.githubusercontent.com/roberthryniewicz/datasets/master/airline-dataset/flights/flights.c...
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.52.133
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.52.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 9719582 (9.3M) [text/plain]
Saving to: ‘/tmp/flights.csv’
100%[=========================================================================================>] 9,719,582 8.44MB/s in 1.1s
2016-11-15 16:28:26 (8.44 MB/s) - ‘/tmp/flights.csv’ saved [9719582/9719582]
[sales1@az1secure0 ~]$ # remove existing copies of dataset from HDFS
[sales1@az1secure0 ~]$ hadoop fs -rm -r -f /sales/airflightsdelays
16/11/15 16:28:28 INFO fs.TrashPolicyDefault: Moved: 'hdfs://az1secure0.field.hortonworks.com:8020/sales/airflightsdelays' to trash at: hdfs://az1secure0.field.hortonworks.com:8020/user/sales1/.Trash/Current/sales/airflightsdelays
[sales1@az1secure0 ~]$
[sales1@az1secure0 ~]$ # create directory on HDFS
[sales1@az1secure0 ~]$ hadoop fs -mkdir /sales/airflightsdelays
[sales1@az1secure0 ~]$
[sales1@az1secure0 ~]$ # put data into HDFS
[sales1@az1secure0 ~]$ hadoop fs -put /tmp/flights.csv /sales/airflightsdelays/
[sales1@az1secure0 ~]$
[sales1@az1secure0 ~]$ hadoop fs -cat /sales/airflightsdelays/flights.csv | head
Year,Month,DayofMonth,DayOfWeek,DepTime,CRSDepTime,ArrTime,CRSArrTime,UniqueCarrier,FlightNum,TailNum,ActualElapsedTime,CRSElapsedTime,AirTime,ArrDelay,DepDelay,Origin,Dest,Distance,TaxiIn,TaxiOut,Cancelled,CancellationCode,Diverted,CarrierDelay,WeatherDelay,NASDelay,SecurityDelay,LateAircraftDelay
2008,1,3,4,2003,1955,2211,2225,WN,335,N712SW,128,150,116,-14,8,IAD,TPA,810,4,8,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,754,735,1002,1000,WN,3231,N772SW,128,145,113,2,19,IAD,TPA,810,5,10,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,628,620,804,750,WN,448,N428WN,96,90,76,14,8,IND,BWI,515,3,17,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,926,930,1054,1100,WN,1746,N612SW,88,90,78,-6,-4,IND,BWI,515,3,7,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,1829,1755,1959,1925,WN,3920,N464WN,90,90,77,34,34,IND,BWI,515,3,10,0,,0,2,0,0,0,32
2008,1,3,4,1940,1915,2121,2110,WN,378,N726SW,101,115,87,11,25,IND,JAX,688,4,10,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,1937,1830,2037,1940,WN,509,N763SW,240,250,230,57,67,IND,LAS,1591,3,7,0,,0,10,0,0,0,47
2008,1,3,4,1039,1040,1132,1150,WN,535,N428WN,233,250,219,-18,-1,IND,LAS,1591,7,7,0,,0,NA,NA,NA,NA,NA
2008,1,3,4,617,615,652,650,WN,11,N689SW,95,95,70,2,2,IND,MCI,451,6,19,0,,0,NA,NA,NA,NA,NA
cat: Unable to write to output stream.
```

The final `cat: Unable to write to output stream.` message is harmless: `head` exits after printing ten lines and closes the pipe that `hadoop fs -cat` is still writing to.

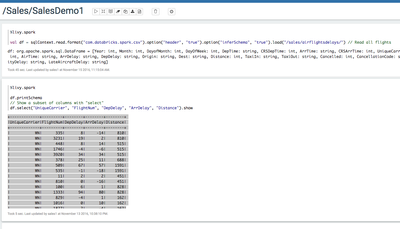

Now log in to Zeppelin as the sales1 user and make sure the Livy interpreter is configured. Then run the following paragraph:

```scala
%livy.spark
// Read all flights
val df = sqlContext.read.format("com.databricks.spark.csv").option("header", "true").option("inferSchema", "true").load("/sales/airflightsdelays/")

df.printSchema

// Show a subset of columns with "select"
df.select("UniqueCarrier", "FlightNum", "DepDelay", "ArrDelay", "Distance").show
```
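Behind the scenes, Zeppelin's Livy interpreter talks to Livy's REST API, and the end user's identity travels in the `proxyUser` field of the session request; that is why the Spark job runs as the Zeppelin user rather than as the `livy` service account. The sketch below reproduces that session request by hand. It assumes Livy listens on its default port 8998 (`LIVY_HOST` is a placeholder), that impersonation is enabled (`livy.impersonation.enabled = true`), and that the `livy` user is allowed to impersonate others through the `hadoop.proxyuser.livy.*` settings in core-site. On a Kerberized cluster, add `--negotiate -u :` to the curl call.

```bash
# JSON body for a new Spark session that runs as the given end user
# (assumes a plain user name with no quotes or backslashes).
livy_session_json() {
  printf '{"kind": "spark", "proxyUser": "%s"}\n' "$1"
}

# Create the session; Livy replies with JSON that includes the new session id.
start_livy_session() {
  local livy_url="$1" user="$2"
  livy_session_json "$user" |
    curl -sS -f -H 'Content-Type: application/json' \
      -X POST --data @- "${livy_url}/sessions"
}

# usage:
#   start_livy_session "http://LIVY_HOST:8998" sales1
#   curl -sS "http://LIVY_HOST:8998/sessions"   # list sessions with their proxy users
```

Code is then submitted to the session with `POST /sessions/{id}/statements` and a `{"code": "..."}` body, which is what a `%livy.spark` paragraph amounts to.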

Your output should look like this:

```
df: org.apache.spark.sql.DataFrame = [Year: int, Month: int, DayofMonth: int, DayOfWeek: int, DepTime: string, CRSDepTime: int, ArrTime: string, CRSArrTime: int, UniqueCarrier: string, FlightNum: int, TailNum: string, ActualElapsedTime: string, CRSElapsedTime: int, AirTime: string, ArrDelay: string, DepDelay: string, Origin: string, Dest: string, Distance: int, TaxiIn: string, TaxiOut: string, Cancelled: int, CancellationCode: string, Diverted: int, CarrierDelay: string, WeatherDelay: string, NASDelay: string, SecurityDelay: string, LateAircraftDelay: string]
+-------------+---------+--------+--------+--------+
|UniqueCarrier|FlightNum|DepDelay|ArrDelay|Distance|
+-------------+---------+--------+--------+--------+
|           WN|      335|       8|     -14|     810|
|           WN|     3231|      19|       2|     810|
|           WN|      448|       8|      14|     515|
|           WN|     1746|      -4|      -6|     515|
....
```

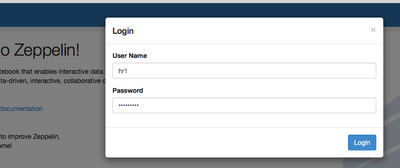

Now open a new browser session and log in to Zeppelin as another user, hr1.

Create a new notebook as the hr1 user and re-run the same steps:

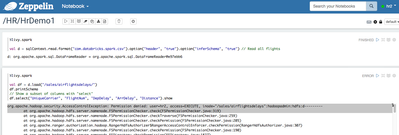

```scala
%livy.spark
// Read all flights
val df = sqlContext.read.format("com.databricks.spark.csv").option("header", "true").option("inferSchema", "true").load("/sales/airflightsdelays/")

df.printSchema

// Show a subset of columns with "select"
df.select("UniqueCarrier", "FlightNum", "DepDelay", "ArrDelay", "Distance").show
```

This time the read is denied, with output similar to the following (this capture was taken with an HR user named hr2):

```
org.apache.hadoop.security.AccessControlException: Permission denied: user=hr2, access=EXECUTE, inode="/sales/airflightsdelays":hadoopadmin:hdfs:d---------
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)
```

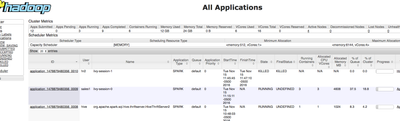

Let's log in to the YARN ResourceManager UI to confirm that the Zeppelin sessions ran as the end users sales1 and hr2.
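The same check can be done from the command line, since `yarn application -list` includes a User column. The hypothetical helper below filters that listing for Livy sessions. It assumes the CLI prints tab-separated, space-padded columns beginning with Application-Id, Application-Name, Application-Type, User, and that Livy names its YARN applications `livy-session-N`; both details may vary between versions, so adjust the pattern if nothing matches.

```bash
# Hypothetical helper: read `yarn application -list` output on stdin and print
# "<application-id> <user>" for every Livy session.
livy_session_users() {
  awk -F'\t' '
    # Strip the space padding the CLI adds around each column.
    { for (i = 1; i <= NF; i++) gsub(/^ +| +$/, "", $i) }
    # Column 2 is Application-Name, column 4 is User.
    $2 ~ /^livy-session-/ { print $1, $4 }
  '
}

# usage: yarn application -list 2>/dev/null | livy_session_users
# (add -appStates ALL to include sessions that have already finished)
```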

Stay tuned for part 2, where we will show how to protect the sales Hive table so that only the sales group has access to it.