Community Articles

- Cloudera Community

- Support

- Community Articles

- Troubleshooting Guide: dbt adapters

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 11-02-2022 01:35 PM - edited 06-26-2023 05:03 AM

This article lists issues that may arise while using dbt adapters and their resolution steps.

- Issue: Error recreating a dbt model in Impala which was originally created using dbt Hive

- Issue: Permission issue for table access

- Issue: Spark2 read managed tables issue via dbt

- Issue: SSL certificate failure or Invalid certificate error

- Issue: Permission issue while creating table

- Issue: Latency while executing dbt commands with dbt-spark-cde adapter

- Issue: Missing yarn.env file in current working directory

- Issue: Command '['tar', '-zcf', '/tmp/dbt-workspace.tar.gz', 'xxx']' returned non-zero exit status 2

- Issue: Troubleshooting Connection with Warehouse

Please reach out to us at innovation-feedback@cloudera.com if you see any issues not listed here.

Issue: Error recreating a dbt model in Impala which was originally created using dbt Hive

Description:

Recreating a dbt model in Impala which was originally created using dbt Hive produces an error similar to

MetaException: Cannot change stats state for a transactional table <table-name> without providing the transactional write state for verification (new write ID 1, valid write IDs null; current state {"BASIC_STATS":"true","COLUMN_STATS":{"id":"true"}}; new state {"BASIC_STATS":"true"}Resolution:

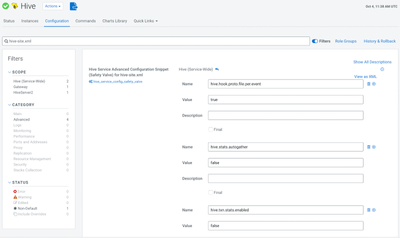

Solved this issue by setting the following two properties to false:

set hive.stats.autogather=false

set hive.txn.stats.enabled=false

In the Hive services and restarting. Follow the guide here on how to set properties for the hive service:

Setting Hive Configuration Overrides

Screenshot (of how the properties are set for the Hive service)

Additional Reference:

Issue: Permission issue for table access

Description:

While accessing Managed extended table you may see the error:

org.apache.spark.sql.AnalysisException:

Spark has no access to table `XXX`.`XXXXX`. Clients can access this table only if

they have the following capabilities: CONNECTORREAD,HIVEMANAGEDINSERTREAD,HIVEMANAGEDINSERTWRITE,HIVEMANAGESTATS,HIVECACHEINVALIDATE,CONNECTORWRITE.

This table may be a Hive-managed ACID table, or require some other capability that Spark

currently does not implement;

at org.apache.spark.sql.catalyst.catalog.CatalogUtils$.throwIfNoAccess(ExternalCatalogUtils.scala:280)

at org.apache.spark.sql.hive.HiveTranslationLayerCheck$SanonfunSapply$1.apply0rElse(HiveTranslationLayerStrategies.scala:109)

at org.apache.spark.sql.hive.HiveTranslationLayerCheck$SanonfunSapply$1.apply0rElse(HiveTranslationLayerStrategies.scala:85)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$SanonfunSresolveOperatorsDown$1$Sanonfun$2.apply(AnalysisHelper.scala:108)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$SanonfunSresolveOperatorsDown$1$Sanonfun$2.apply(AnalysisHelper.scala:108)

at org.apache.spark.sql.catalyst.trees.CurrentOriginS.withOrigin(TreeNode.scala:72)

at org.apache.spark.sql.catalyst.plans. logical.AnalysisHelper$SanonfunSresolveOperatorsDown$1.apply(AnalysisHelper.scala:107)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$SanonfunSresolveOperatorsDown$1.apply(AnalysisHelper.scala:106)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$.allowInvokingTransformsInAnalyzer(AnalysisHelper.scala:194)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelperSclass.resolveOperatorsDown(AnalysisHelper.scala:106)

at org.apache. spark. sql. catalyst.plans. logical.LogicalPlan. resolveOperatorsDown(LogicalPlan.scala: 29)

at org.apache.spark.sql.catalyst.plans. logical.AnalysisHelper$SanonfunSresolveOperatorsDown$1$SanonfunSapply$6.apply(AnalysisHelper.scala:113)

Resolution:

To solve this issue, we need the following configurations.

Property | Example Value | Description |

spark.sql.extensions | com.qubole.spark.hiveacid.HiveAcidAutoConvertExtension | Extension needed for auto-translate to work |

spark.kryo.registrator | com.qubole.spark.hiveacid.util.HiveAcidKyroRegistrator | For using kryo serialization. |

spark.sql.hive.hwc.execution.mode | spark |

|

spark.driver.extraClassPath | local:/opt/cloudera/parcels/CDH/lib/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.7.1.8.0-801.jar | to use HWC(Hive Warehouse Connector) jars. |

spark.executor.extraClassPath | local:/opt/cloudera/parcels/CDH/lib/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.7.1.8.0-801.jar |

|

The hive Warehouse connector jar should be supplied along with the configs to be able to access managed tables. You can do the above by either:

- Specify properties in the spark-defaults.conf file in the form of property value.

- passing in config values through a spark-session

Additional Reference:

Introduction to HWC | CDP Private Cloud

Issue: Spark2 read managed tables issue via dbt

Description:

If a managed table is created in Hive and is accessed with Spark-livy, it may throw permission errors shown below:

12:48:21 Completed with 1 error and 0 warnings:

12:48:21

12:48:21 Runtime Error in model test2 (models/staging/test2.sql)

12:48:21 Database Error

12:48:21 Error while executing query:

12:48:21 Spark has no access to table `certified_data`.`test_hive_streaming2`. Clients can access this table only if

12:48:21 they have the following capabilities: CONNECTORREAD,HIVEFULLACIDREAD,HIVEFULLACIDWRITE,HIVEMANAGESTATS,HIVECACHEINVALIDATE,CONNECTORWRITE.

12:48:21 This table may be a Hive-managed ACID table, or require some other capability that Spark

12:48:21 currently does not implement;

12:48:21

12:48:21 Done. PASS=0 WARN=0 ERROR=1 SKIP=0 TOTAL=1

Resolution:

This issue can be resolved by adding the properties in spark configuration:

spark.kryo.registrator=com.qubole.spark.hiveacid.util.HiveAcidKyroRegistrator

spark.sql.extensions=com.qubole.spark.hiveacid.HiveAcidAutoConvertExtension

spark.sql.hive.hwc.execution.mode=spark

spark.driver.extraClassPath=local:/opt/cloudera/parcels/CDH/lib/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.7.1.8.0-801.jar

spark.executor.extraClassPath=local:/opt/cloudera/parcels/CDH/lib/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.7.1.8.0-801.jar

Additional Reference:

Introduction to HWC | CDP Private Cloud

Issue: SSL certificate failure or Invalid certificate error

Description:

While using the spark-cde and spark-livy adapter with a warehouse that uses a self-sign certificate, while running dbt debug and other dbt commands you may get an error as shown below :

certificate verify failed: self-signed certificate

Resolution:

To disable SSL certificate verification, in the profile file for the adapter you can set the Additional variable as shown below:

verify_ssl_certificate: false

Issue: Permission issue while creating table

Description:

dbt user while running dbt command may see the error as shown below, this error occurs when user doesn't have CREATE TABLE permissions:

dbt run

11:57:43 Running with dbt=1.1.0

11:57:43 Found 1 model, 0 tests, 0 snapshots, 0 analyses, 208 macros, 0 operations, 0 seed files, 0 sources, 0 exposures, 0 metrics

11:57:43

11:59:02 Encountered an error:

Runtime Error

Runtime Error

Database Error

Error while executing query: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Permission denied: user [airflow.bigdata] does not have [WRITE] privilege on [[hdfs://dlhdfs/warehouse/tablespace/external/hive/test_dbt.db, hdfs://dlhdfs/warehouse/tablespace/external/hive/test_dbt.db/]]);

Resolution:

Check the dbt logs to understand what permissions are needed for different tables, and get those permissions to the user.

Additional Reference:

What privileges does my database user need to use dbt? | dbt Developer Hub

Issue: Latency while executing dbt commands with dbt-spark-cde adapter

Description:

While running dbt commands using a dbt-spark-cde adapter, we have observed unpredictable latency while completing the execution of dbt commands.

Reason:

We did a bunch of work on improving the performance of the cde adapter and have added instrumentation to understand where the latencies are.

There are several factors that cause latencies in CDE

- Load on cluster impacts when the spark job is picked up/scheduled for run

- Use of polling mechanisms in intervals to check job status (success/failure) after a job is submitted.

- Use of a sleep interval(40 secs currently) to check the output of a job run after job success/failure. This is where CDE aggregates logs and results.

- No feature in CDE to have a long-running spark session. Each single spark SQL query from dbt creates a new spark session.

Running any SQL query in CDE has the following steps:

- Generate a job name.

- Create a resource

- Upload the resource.

- Submit the job

- Run the job

- Fetch the job results from log

- Clean the resources

Explanation with an example:

Taking GitHub - cloudera/dbt-spark-cde-example as an example dbt project, here’s the breakdown of the CDE’s steps for the two most common dbt commands:

dbt debug

This command executes the following SQL query:

- select 1 as id

Breakdown as per CDE’s steps:

| Step name | Time taken (seconds) | Log | |

| 1 | Generate a job name. | 0 | 07:00:46.778564 Job created with id: |

| 2 | Create a resource | 1 | 07:00:46.779102 Create resources: files |

| 3 | Upload the resource. | 0 | 07:00:47.178924 Upload resource: SQL resource: |

| 4 | Submit the job | 1 | 07:00:47.929512 Submit job |

| 5 | Run the job (and wait for the job to change state from start to succeed/fail) | 62 | 07:00:48.347828 Run job |

| 6 | Fetch the job results from log (after waiting for the job logs to be aggregated) | 82 | 07:01:50.545694 Get job output |

| 7 | Clean the resources | 0 | 07:03:12.060899 Delete job |

| Total | 146 |

NOTE: Typically, dbt run executes all the models inside the dbt project. For sake of simplicity, we have only executed a single model (i.e. /models/staging/covid/stg_covid__cases.sql)

dbt run

We will consider running dbt run on a single model by running the command dbt run --select /models/staging/covid. This command executes the following SQL statements, as dbt-core tries to build a DAG before it starts to build the model. The first 5 statements are the “fixed cost” for any dbt run. If there are multiple models, there will only be one invocation of the first 5 statements. The latency of the dbt run command is then determined by the number of models and the time taken for each model.

1. show databases

2. show table extended in spark_cde_demo_reference like '*'

3. show table extended in spark_cde_demo_mart_covid like'*'

4. show table extended in spark_cde_demo like '*'

5. show table extended in spark_cde_demo_staging_covid like '*'

6. create or replace view spark_cde_demo_staging_covid.stg_covid__cases

as

select

cast(cast(unix_timestamp(date_rep,"dd/MM/yyyy") as timestamp) AS DATE) as date_rep,

cast(cases as BIGINT) as cases,

cast(deaths as BIGINT) as deaths,

geo_id

from spark_cde_demo.raw_covid_casesFor each statement, the breakdown as per CDE’s steps can be obtained from the dbt logs.

Let’s consider the execution of the last query in CDE, the breakdown as per CDE’s steps:

| Step name | Time taken (seconds) | Log | |

| 1 | Generate a job name. | 0 | 07:27:39.914554 Job created with id: |

| 2 | Create a resource | 1 | 07:27:39.915082 Create resources: files |

| 3 | Upload the resource. | 0 | 07:27:40.213107 Upload resource: |

| 4 | Submit the job | 1 | 07:27:40.913892 Submit job |

| 5 | Run the job (and wait for the job to change state from start to succeed/fail) | 62 | 07:27:41.223755 Run job |

| 6 | Fetch the job results from log (after waiting for the job logs to be aggregated) | 82 | 07:28:43.849548 Get job output |

| 7 | Clean the resources | 0 | 07:30:05.096386 Delete job |

| Total | 146 |

Conclusion:

Since each query follows the aforementioned 7 steps, to execute each query in the above example CDE will take similar time. This means to execute a single dbt model on average it takes

6 * 146(average time to execute each query) = 876 seconds or 14.6 minutes |

If the dbt model is complex or if multiple dbt models are involved in a single dbt command this latency increases accordingly.

Issue: Missing yarn.env file in current working directory

Description:

While running dbt commands on yarn, we have seen this error where commands are not issued from correct directory which is containing yarn.env file.

Assuming your dbt project directory path is ~/my-project-dir/sample-dbt-project .

Your working directory to issue yarn commands is ~/my-project-dir/

Resolution:

Create your yarn.env file in the location ~/my-project-dir

Issue: Command '['tar', '-zcf', '/tmp/dbt-workspace.tar.gz', 'xxx']' returned non-zero exit status 2

Description:

While running dbt commands on yarn, we have seen this error where commands are not issued from correct directory which is containing yarn.env file.

Assuming your dbt project directory path is ~/my-project-dir/sample-dbt-project .

Your working directory to issue yarn commands is ~/my-project-dir/

Resolution:

- Check the current working directory where you are running the command. It should be immediately outside of the dbt project . In this case ~/my-project-dir/

- Check the DBT_PROJECT_NAME variable in yarn.env. In this case it should be DBT_PROJECT_NAME=sample-dbt-project and not xxx

Issue: Troubleshooting Connection with Warehouse

Description:

Usually dbt fails to connect to warehouse in case of wrong values in profiles.yml

A sample profiles file(profiles.yml) to connect to hive warehouse is:

dbt_hive_demo:

outputs:

dev:

auth_type: kerberos

host: tsenapati-3.tsenapati.root.hwx.site

port: 10000

schema: dbt_hive_demo

threads: 4

type: hive

use_http_transport: false

use_ssl: false

kerberos_service_name: hive

target: dev

Resolution:

If connection fails try troubleshooting without yarn/dbt involved. Follow the steps below:

- Installing the package:

pip install impyla - Here’s a sample script to test connection to hive warehouse for profiles file above, Create a test file connectiontest.py with following content:

from impala.dbapi import connect

conn = connect(

host = "host.name",

port = portnumber,

auth_mechanism = "GSSAPI",

use_ssl = False,

use_http_transport = False,

kerberos_service_name="hive")

cursor = conn.cursor()

cursor.execute("SHOW DATABASES")

res = cursor.fetchall()

print(res)

cursor.close()

conn.close() - Modify values with actual values from your host and run the command:

python3 connectiontest.py

If the above script succeeds and shows sample databases, then your connection parameters are correct. Populate the same in profiles.yml accordingly.