Community Articles

- Cloudera Community

- Support

- Community Articles

- dbt on yarn in Cloudera Data Platform

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 11-17-2022 12:00 AM - edited 06-26-2023 04:51 AM

Overview

Cloudera has implemented dbt adapters for Hive, Spark, and Impala. In addition to providing the adapters, Cloudera is also offering dbt capabilities to our on-prem customers (CDP PvC-Base). Our solution satisfies our customers' stringent security and privacy requirements while providing an easy-to-use turnkey solution for practitioners.

In this document, we will show how users can run dbt on YARN without having to worry about anything else. We have packaged everything as a .tar.gz file.

If you are new to dbt, refer to the article to learn more about dbt.

Prerequisites

- The cluster should have Python 3.7+

- The user should have the ability to ssh into a gateway machine

- The user should have access to the git repo with the dbt models and has the ability to create PRs in that git repo with changes. If you are creating your own repo as an analyst, refer to this Getting started with dbt Core

- The user SSH key can be configured for access to the git repo

Compatibility

- >= CentOS 7 x86_x64

- >= CDP 7.1.8

Setting up dbt on yarn

We have created a dbt deployment package to simplify the setup process for dbt on yarn. We will only have to deploy this package on the cluster gateway machine. We will also make sure that we dont have a need to deploy packages locally on any of the worker nodes. Our deployment will take care of it too.

Step 1. Login to your base cluster

SSH to your gateway machine by running the following command:

ssh <gateway machine>

Step 2. Download and install the dbt deployment package

- Download the cloudera-dbt-deployment package

mkdir </path/to/working/dir>

Sample terminal output

cd </path/to/working/dir>

wget https://github.com/cloudera/cloudera-dbt-deployment/releases/download/Prerelease/cloudera-dbt-deployment-1.2.0.tar.gz - Create a python venv and install the package

python3 -m venv <Virtual Environment Name>

Sample terminal output

source <Virtual Environment Name>/bin/activate

python3 -m pip install cloudera-dbt-deployment-1.2.0.tar.gz

Step 3: Download the python packages for all of the dbt runtime (for airgapped environments)

We have packaged dbt core and all the dbt adapters we support into a single deployable package. This package will get updated on a regular basis whenever the underlying python packages are updated.

We will need to download this package and upload to HDFS.

command:

wget https://github.com/cloudera/cloudera-dbt-deployment/releases/download/Prerelease/Centos7_x86_x64_dbt...

hdfs dfs -copyFromLocal Centos7_x86_x64_dbt_dependencies.tar.gz hdfs://path/to/dependencies

At this point, we are ready to work with specific dbt projects.

Setup yarn for a specific dbt project

Step 1: Clone the dbt project

We will use a sample dbt project from this repo. If you would like to start a new dbt project from scratch, refer to Getting started with dbt Core | dbt Developer Hub. Clone the sample project by running the following command:

git clone https://github.com/cloudera/dbt-hive-example.git

Step 2: Create the yarn.env file

Create environment variables for the dbt project in your working directory by running the following command. This file will be used by the yarn_dbt command. We need to create this file in the folder where the dbt project lives.

cd </path/to/dbt-project>

vi yarn.env

Make sure the set the following variables in the file:

- DEPENDENCIES_PACKAGE_LOCATION

- YARN_JAR

- DBT_SERVICE_USER

- DBT_PROJECT_NAME

- YARN_RM_URI

- DBT_HEADLESS_KEYTAB

- DBT_HEADLESS_PRINCIPAL

DEPENDENCIES_PACKAGE_PATH_HDFS=/tmp

DEPENDENCIES_PACKAGE_NAME=Centos7_x86_x64_dbt_dependencies.tar.gz

YARN_JAR=/opt/cloudera/parcels/CDH/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell.jar

DBT_SERVICE_USER=hive

DBT_PROJECT_NAME=dbt_hive_demo

YARN_RM_URI=http://hajmera-1.vpc.cloudera.com:8088

DBT_HEADLESS_KEYTAB=/cdep/keytabs/systest.keytab

DBT_HEADLESS_PRINCIPAL=systest@VPC.CLOUDERA.COM

CURRENT_DBT_USER=systest

DBT_DOCS_PORT=7777

YARN_CONTAINER_MEMORY=2048

YARN_TIMEOUT=1200000

APPLICATION_TAGS="cia-user-ha-testing.dbt-debug"

Refer to the table below to understand environment variables:

Key | Sample values | Notes |

DEPENDENCIES_PACKAGE_PATH_HDFS | /tmp | Path in hdfs containing the tarball with all python packages needed for dbt for an offline install. Downloaded in previous section. |

| DEPENDENCIES_PACKAGE_NAME | e.g. Centos7_x86_x64_dbt_dependencies.tar.gz, | Name of package in hdfs for offline install |

YARN_JAR | e.g: /opt/cloudera/parcels/CDH/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell.jar | Distributed Shell Jar path for YARN job execution. This needs to be changed based on the CDP Base version. |

DBT_SERVICE_USER | e.g: hive | Service user with access to YARN resources. This user’s key tab is distributed through cloudera SCM and can be found in location /var/run/cloudera-scm-agent/process/ |

DBT_PROJECT_NAME | e.g: dbt_hive_demo | Project name |

DBT_HEADLESS_KEYTAB | e.g: We use keytab for systest user found at path /cdep/keytabs/systest.keytab | A headless key tab corresponding to a POSIX user that can start services without prompting for password. E.g. hdfs,hbase,... |

DBT_HEADLESS_PRINCIPAL | e.g: | Kerberos Principal for above dbt headless keytab |

CURRENT_DBT_USER | systest | Logged in user in the session with valid keytab |

DBT_DOCS_PORT | 7777 | Port where dbt docs are hosted |

YARN_CONTAINER_MEMORY | 2048 | Memory allocation for YARN container in mb |

YARN_TIMEOUT | 1200000 | Time out for YARN container in milliseconds |

APPLICATION_TAGS | cia-user-ha-testing.dbt-debug | Prefix/identifier for YARN application, can be seen in YARN-UI |

Step 3: Create the dbt profiles.yml file

We will be using the Kerberos method to connect to the query engines. So, the profiles.yml file should reflect it. Edit the profiles.yml file as per warehouse configurations by running the following command:

cd /path/to/dbt-models

vi profiles.yml

dbt_hive_demo:

outputs:

dbt_hive_demo:

auth_type: kerberos

host: hajmera-1.vpc.cloudera.com

port: 10000

schema: dbt_hive_demo

threads: 2

type: hive

use_http_transport: false

use_ssl: false

kerberos_service_name: hive

target: dbt_hive_demo

Step 4: Run kinit to get the authentication token

Provide an authentication token to execute dbt by running the following command:

kinit -kt </path/to/keytab/file> <username>

Work with the dbt project using yarn_dbt

In the example repo, we have a sample dbt project called ‘dbt_hive_demo’.

Inside this demo project, we can issue dbt commands to run parts of the project. The demo project contains examples of the following operations and commands supported with YARN deployment:

- Connection test: dbt debug

- Loading reference data: dbt seed

- Data validation: dbt test

- Running transformation: dbt run

- Accessing documentation: dbt docs

More commands and a detailed description of each command can be found here.

| Note: yarn_dbt commands needs to be run in the same folder that yarn.env is located. |

Step 1. Test the connection to the warehouse

To ensure we’ve configured our profile correctly, test the connection by running the following command:

yarn_dbt debug

Step 2. Loading reference data

Load the reference dataset to the warehouse by running the following command:

yarn_dbt seed

Step 3. Validate reference data

Our Seeds are configured with a couple of tests. Users can read more about it here

We also have a custom test created in dbt_hive_demo/tests/generic/test_length.sql. This test is used to check the character length of a column. Our reference data includes ISO Alpha2 and Alpha3 country codes - we know these columns should always be 2 or 3, respectively. To ensure that our reference data is high quality, we can use dbt to test these assumptions and report the results. We expect that Alpha2 and Alpha3 columns are the correct lengths and that no fields should be null. Test the dataset by running the following command:

yarn_dbt test

Step 4. Run the transformation

We have 3 sets of models in this demo project.

- Firstly, we have raw. Our raw models make use of Sources. This is data already in our database that dbt needs to refer to. This is the fake data we loaded earlier. Our raw models are defined in models/raw/covid

- Next, we have staging. Our staging models use the source() method to refer to the Sources we defined in our raw models. The staging models are intermediate views created over our raw data to handle some basic type conversion. These are materialized as views, and we don't expect our end users to query the staging models. Our staging models are defined in models/staging/covid

- Lastly, we have mart. Our mart models use the ref() method to refer to the staging models and reference seeds we created using dbt. We use the staging views to handle most of the logic for typecasting, but we do some renaming here to make our models easier for users to understand. These models are materialized as tables, as this gives greater performance for user queries. We can use incremental models to make the building of the model more performant. Our mart models are defined in models/mart/covid

Execute all the transformations by running the following command:

yarn_dbt run

Step 5. Host and access the documentation

- To generate and host the documentation on the gateway machine, run the following command:

yarn_dbt docs

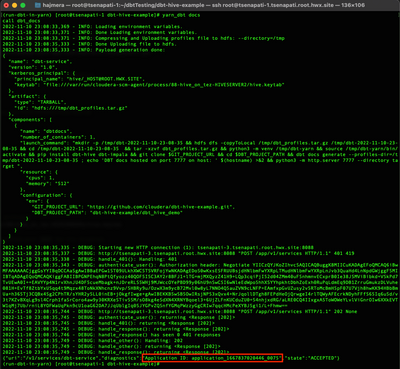

Sample terminal output - To access the documentation, copy the Application ID from the above output.

and run the following command:yarn logs -applicationId <application-id> | grep 7777Sample terminal output - Copy the host address and add the :7777 port to it. Paste the following URL to your browser:

Sample URL:hajmera-3.vpc.cloudera.com:7777

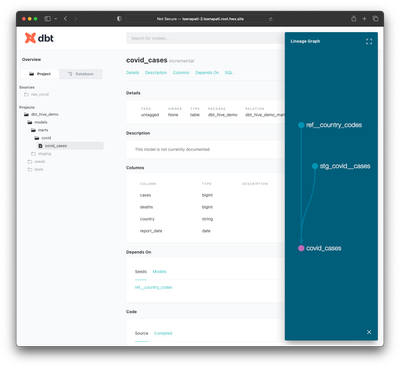

- Hosted documentation looks like this:

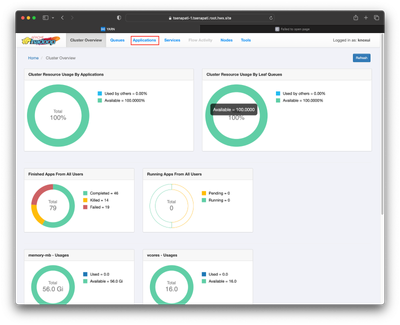

Accessing logs in the yarn UI

In order to debug issues further, you can view the logs in the yarn UI.

- Find the yarn application id from the terminal

- Go to YARN-UI for the cluster, URL looks similar to:

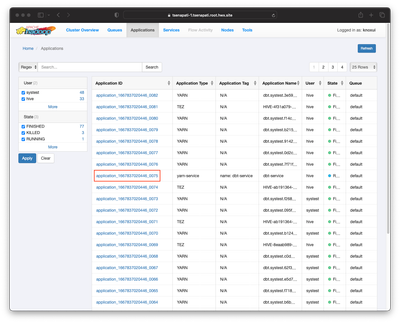

- Click on the Application tab and locate the ApplicationID:

- Click on Application ID and click on Logs:

Schedule dbt models

dbt models can be scheduled using any workflow management platform like Apache Airflow or Apache Oozie.

Conclusion

In this document, we have shown the different requirements that need to be met to support the full software development life cycle of dbt models. The table below shows how those requirements have been met.

Requirement | Will this option satisfy the requirement? If yes, how? |

Have a dev setup where different users can do the following (in an isolated way):

| Yes, per user can log into gateway machine, having checked out their own branch of the given dbt project codebase. |

| Yes |

| Yes |

| Yes |

Have a CI/CD pipeline to push committed changes in the git repo to stage/prod environments | Yes, simple git-push and delegating to external CI/CD system |

See logs in stage/prod of the dbt runs | Yes |

See dbt docs in stage/prod | Yes |

Support isolation across different users using it in dev | Yes, each session is isolated and use their own login credentials |

Be able to upgrade the adapters or core seamlessly | Cloudera will publish new github assets, under github releases. |

Ability to run dbt run regularly to update the models in the warehouse | Yes, via any scheduler like Apache Airflow |

Facing issues while working with dbt? We have troubleshooting guide, to help you resolve issues.

You can also reach out to innovation-feedback@cloudera.com if you have any questions.