Using nifi & pyspark to move & transform data on S3 - examples and resources

- Labels: Apache NiFi

Created on 04-21-2018 11:13 AM - edited 09-16-2022 06:07 AM


Hey all,

I'm after some information on how I can use NiFi to get a file from S3, send it to PySpark, transform it, and move it to another folder in a different bucket.

I have used this template https://gist.github.com/ijokarumawak/26ff675039e252d177b1195f3576cf9a to get data moving between buckets, which works fine.

But I'm a bit unsure of the next steps: how to pass a file to PySpark, run a script to transform it, and then put it in another location. I have been looking at https://pierrevillard.com/2016/03/09/transform-data-with-apache-nifi/, which I will try to understand.

If you know of or have any examples of how I might do this, or could describe how I might set it up, that would be much appreciated.

Thanks,

Tim

Created on 04-23-2018 09:03 PM - edited 08-17-2019 06:38 PM


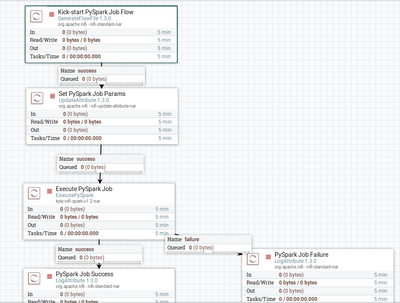

Hello. Using this template https://github.com/Teradata/kylo/blob/master/samples/templates/nifi-1.0/template-starter-pyspark.xml I managed to get a pyspark job running.

However, the Spark script doesn't accept data from a flow file; it has hardcoded paths for the input and output files. I also had to tell Spark to use a specific Anaconda Python environment by adding the line export PYSPARK_PYTHON="/path/to/python/env/python" to Spark's conf/spark-env.sh file.

It would be nice to know how to create a script and template that accept flow files, however. If anyone has a template with an example of that, it would be great.
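One possible direction, as a minimal sketch only: instead of hardcoding paths, the PySpark script could take its input and output locations as command-line arguments, so that NiFi can fill them in per flow file. Everything named here is an assumption for illustration and not part of the Kylo template: the `--input` and `--output` flags, the CSV format, the `dropna()` placeholder transformation, and the `s3a://` paths (which also assume Spark's S3A connector and credentials are already configured).

```python
import argparse
import sys


def parse_args(argv):
    """Parse the input and output locations passed on the command line."""
    parser = argparse.ArgumentParser(description="Transform one file with PySpark.")
    # Hypothetical flags; the paths would be supplied by whatever launches the job.
    parser.add_argument("--input", required=True, help="e.g. s3a://source-bucket/path/file.csv")
    parser.add_argument("--output", required=True, help="e.g. s3a://target-bucket/folder/")
    return parser.parse_args(argv)


def run_job(input_path, output_path):
    """Read a CSV, apply a placeholder transformation, and write the result."""
    # Imported here so the argument parsing can be exercised without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("nifi-pyspark-transform").getOrCreate()
    try:
        df = spark.read.csv(input_path, header=True, inferSchema=True)
        # Placeholder transformation (assumption): drop rows containing nulls.
        transformed = df.dropna()
        transformed.write.mode("overwrite").csv(output_path, header=True)
    finally:
        spark.stop()


def main(argv):
    args = parse_args(argv)
    run_job(args.input, args.output)


# Only run when arguments are supplied, e.g. through spark-submit.
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1:])
```

With a script like this, a processor that launches spark-submit (ExecuteStreamCommand, for example) could build the arguments from flow file attributes using NiFi Expression Language, such as ${filename}. Note that this passes the file's location rather than the flow file content itself.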

Cheers,

Tim

Created 04-23-2018 09:23 PM


I would recommend trying the Apache NiFi ExecuteSparkInteractive processor.

Created 04-23-2018 09:33 PM


Thanks. I did see that, but it looked a bit hard to follow how to do it from scratch.