Apache Deep Learning 101: Using Apache MXNet on an...

Created on 02-23-2018 06:47 PM - edited 08-17-2019 08:46 AM

This article is for people preparing to attend my talk on Deep Learning at DataWorks Summit Berlin 2018 (https://dataworkssummit.com/berlin-2018/#agenda) on Thursday, April 19, 2018 at 11:50 AM Berlin time.

It covers running Apache MXNet on an HDF 3.1 node with CentOS 7.

Let's get this installed!

git clone https://github.com/apache/incubator-mxnet.git

The installation instructions on the Apache MXNet website (http://mxnet.incubator.apache.org/install/index.html) are excellent: pick your platform and your installation style. I am taking the simplest route, the Linux path.

We need OpenCV to handle images in Python, so we install it along with the build tools required to compile both OpenCV and Apache MXNet.

HDF 3.1 / CentOS 7 Setup

    # Build tools and the image/video libraries OpenCV needs
    sudo yum groupinstall 'Development Tools' -y
    sudo yum install cmake git pkgconfig -y
    sudo yum install libpng-devel libjpeg-turbo-devel jasper-devel openexr-devel libtiff-devel libwebp-devel -y
    sudo yum install libdc1394-devel libv4l-devel gstreamer-plugins-base-devel -y
    sudo yum install gtk2-devel -y
    sudo yum install tbb-devel eigen3-devel -y
    pip install numpy

    # Fetch OpenCV 3.1.0 and the matching contrib modules, side by side in the home directory
    cd ~
    git clone https://github.com/Itseez/opencv.git
    cd opencv
    git checkout 3.1.0
    # Return home first so opencv_contrib lands at ~/opencv_contrib, where cmake expects it
    cd ~
    git clone https://github.com/Itseez/opencv_contrib.git
    cd opencv_contrib
    git checkout 3.1.0

    # Configure, build, and install OpenCV with Python 2 bindings
    cd ~/opencv
    mkdir build
    cd build
    cmake -D CMAKE_BUILD_TYPE=RELEASE \
      -D CMAKE_INSTALL_PREFIX=/usr/local \
      -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/modules \
      -D INSTALL_C_EXAMPLES=OFF \
      -D INSTALL_PYTHON_EXAMPLES=ON \
      -D BUILD_EXAMPLES=ON \
      -D BUILD_OPENCV_PYTHON2=ON ..
    sudo make
    sudo make install
    sudo ldconfig
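
After a long source build it can help to confirm that Python can actually see the libraries before wiring anything into NiFi. The following is a minimal sketch, not part of the original setup: the helper name `check_modules` is hypothetical, and it assumes the modules of interest are `numpy`, `cv2` (OpenCV's Python binding), and `mxnet`. It is written to run on either Python 2.7 or Python 3.

```python
def check_modules(names):
    """Return {module_name: True/False} for whether each module imports cleanly."""
    status = {}
    for name in names:
        try:
            __import__(name)
            status[name] = True
        except Exception:
            # A missing module raises ImportError; a broken native library
            # can raise other errors, so any failure counts as "not usable".
            status[name] = False
    return status


if __name__ == "__main__":
    # Assumed module list for this setup (hypothetical, adjust as needed).
    for name, ok in sorted(check_modules(["numpy", "cv2", "mxnet"]).items()):
        print("%s: %s" % (name, "ok" if ok else "MISSING"))
```

Run it with the same interpreter that will execute the analysis script, since packages installed for one Python version are not visible to another.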

Local CentOS 7 Run Script

python -W ignore analyzex.py $1

Python

https://github.com/tspannhw/ApacheBigData101/blob/master/analyzex.py

See Part 1: https://community.hortonworks.com/articles/171960/using-apache-mxnet-on-an-apache-nifi-15-instance-w...

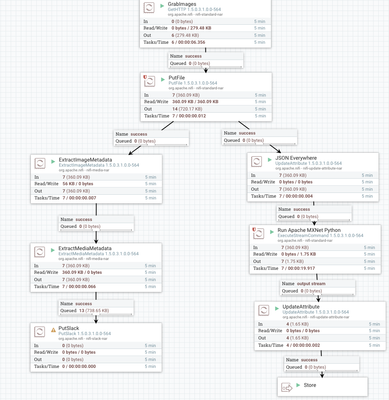

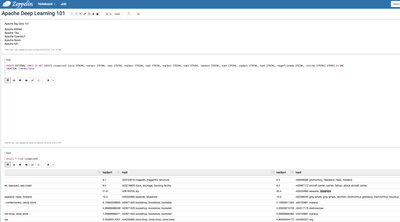

Apache NiFi Flow

This first flow retrieves images from the picsum.photos API, stores them locally, and then runs some basic processing. The first branch extracts all the metadata we can. The second branch calls our example Inception Apache MXNet Python script for image recognition. The script returns a JSON file that we process with the same code used by the local version of this program.
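
To make the "script returns JSON" step concrete, here is a rough sketch of how class probabilities could be flattened into a single JSON record. It is not the code from analyzex.py: the function name `top_k_record` and the field names (`imagefilename`, `top1`, `top1pct`, and so on) are hypothetical, chosen only because a flat record maps easily onto a table later. The probabilities and labels are assumed to come from the model's output and its label file.

```python
import json


def top_k_record(image_path, probabilities, labels, k=5):
    """Build a flat, JSON-serializable dict of the k most likely labels."""
    # Indices of the classes, sorted from most to least probable.
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: probabilities[i], reverse=True)[:k]
    record = {"imagefilename": image_path}  # hypothetical field name
    for rank, idx in enumerate(ranked, start=1):
        record["top%d" % rank] = labels[idx]
        record["top%dpct" % rank] = round(probabilities[idx] * 100.0, 1)
    return record


if __name__ == "__main__":
    # Made-up probabilities purely for illustration.
    demo = top_k_record("/tmp/image.jpg", [0.1, 0.7, 0.2],
                        ["pier", "lakeshore", "dock"], k=2)
    print(json.dumps(demo, sort_keys=True))
```

Printing one JSON object per run to standard output keeps the script easy to call from a flow, since the caller only has to capture the output stream.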

Once we funnel that out of our process group, we send it to the MXNet processing group, which converts the JSON to Apache Avro and then to Apache ORC for storage in HDFS, where it backs an external Apache Hive table.
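
The JSON-to-Avro conversion needs a record schema that matches the fields the script emits. As an illustration only, the sketch below derives a simple Avro record schema from one sample flat JSON record. The helper `avro_schema_for`, the schema name `mxnet_result`, the namespace `example`, and the sample field names are all hypothetical; the schema actually registered for this flow may differ.

```python
import json


def avro_schema_for(record, name="mxnet_result", namespace="example"):
    """Derive an Avro record schema (as a dict) from one flat sample record."""
    def avro_type(value):
        # bool must be tested before int, because bool is a subclass of int.
        if isinstance(value, bool):
            return "boolean"
        if isinstance(value, int):
            return "long"
        if isinstance(value, float):
            return "double"
        return "string"

    # Every field is a nullable union with a null default, so a record
    # with a missing value still validates against the schema.
    fields = [
        {"name": key, "type": ["null", avro_type(record[key])], "default": None}
        for key in sorted(record)
    ]
    return {"type": "record", "name": name,
            "namespace": namespace, "fields": fields}


if __name__ == "__main__":
    sample = {"top1": "lakeshore", "top1pct": 70.0}  # made-up sample record
    print(json.dumps(avro_schema_for(sample), indent=2))
```

Making every field nullable is a deliberate choice in this sketch: it trades stricter validation for tolerance of partial records.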

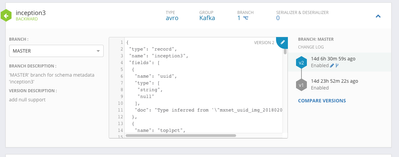

Our Schema hosted in Hortonworks Schema Registry

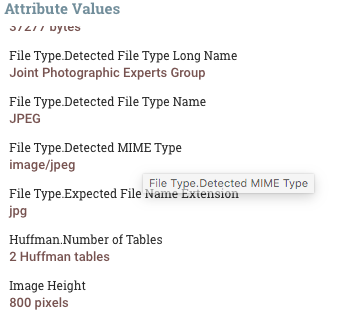

Examining The Picture with ExtractMedia...

To Execute Apache MXNet Installed on HDF Node

An Example Image Loaded From the API

Exploring the data with Apache Hive SQL in Apache Zeppelin on HDP 2.6.4

An Example Unsplash Image

Apache MXNet Caption: Lakeshore

Resources:

https://github.com/tspannhw/ApacheDeepLearning101

Images REST API Provided by PicSum (Digital Ocean + Beluga CDN)

https://picsum.photos/600/800/?random

https://belugacdn.com/?ref=picsum.photos

https://www.digitalocean.com/?ref=picsum.photos

Images Provided By Unsplash

Created on 05-17-2018 12:55 PM

sudo apt-get update

sudo apt-get install -y wget python gcc

wget https://bootstrap.pypa.io/get-pip.py && sudo python get-pip.py

sudo apt-get install graphviz

pip install graphviz

pip install mxnet --pre